Yann Neuhaus

Another file system for Linux: bcachefs (2) – multi device file systems

In the last post, we looked at the very basics of bcachefs, a new file system which was added to the Linux kernel in version 6.7. We’ve already seen how easy it is to create a new file system on a single device, how to encrypt and/or compress it, and that checksumming of metadata and user data is enabled by default, but there is much more you can do with bcachefs. In this post we’ll look at how you can work with a file system that spans multiple devices, which is quite common in today’s infrastructures.

When we looked at the devices available to the system in the last post, it looked like this:

tumbleweed:~ $ lsblk | grep -w "4G"

└─vda3 254:3 0 1.4G 0 part [SWAP]

vdb 254:16 0 4G 0 disk

vdc 254:32 0 4G 0 disk

vdd 254:48 0 4G 0 disk

vde 254:64 0 4G 0 disk

vdf 254:80 0 4G 0 disk

vdg 254:96 0 4G 0 disk

This means we have six unused block devices to play with. Let’s start again with the simplest case, one device, one file system:

tumbleweed:~ $ bcachefs format --force /dev/vdb

tumbleweed:~ $ mount /dev/vdb /mnt/dummy/

tumbleweed:~ $ df -h | grep dummy

/dev/vdb 3.7G 2.0M 3.6G 1% /mnt/dummy

Assuming we’re running out of space on that file system and we want to add another device, how does that work?

tumbleweed:~ $ bcachefs device add /mnt/dummy/ /dev/vdc

tumbleweed:~ $ df -h | grep dummy

/dev/vdb:/dev/vdc 7.3G 2.0M 7.2G 1% /mnt/dummy

Quite easy, and no separate step is required to extend the file system: this was done automatically, which is quite nice. You can even go a step further and specify how large the file system should be on the new device (which doesn’t make much sense in this case):

tumbleweed:~ $ bcachefs device add --fs_size=4G /mnt/dummy/ /dev/vdd

tumbleweed:~ $ df -h | grep mnt

/dev/vdb:/dev/vdc:/dev/vdd 11G 2.0M 11G 1% /mnt/dummy

Let’s remove this configuration and then create a file system with multiple devices right from the beginning:

tumbleweed:~ $ bcachefs format --force /dev/vdb /dev/vdc

Now we formatted two devices at once, which is great, but how can we mount that? This will obviously not work:

tumbleweed:~ $ mount /dev/vdb /dev/vdc /mnt/dummy/

mount: bad usage

Try 'mount --help' for more information.

The syntax is a bit different, so either do it with “mount”:

tumbleweed:~ $ mount -t bcachefs /dev/vdb:/dev/vdc /mnt/dummy/

tumbleweed:~ $ df -h | grep dummy

/dev/vdb:/dev/vdc 7.3G 2.0M 7.2G 1% /mnt/dummy

… or use the “bcachefs” utility using the same syntax for the list of devices:

tumbleweed:~ $ umount /mnt/dummy

tumbleweed:~ $ bcachefs mount /dev/vdb:/dev/vdc /mnt/dummy/

tumbleweed:~ $ df -h | grep dummy

/dev/vdb:/dev/vdc 7.3G 2.0M 7.2G 1% /mnt/dummy

What is a bit annoying is that you need to know which devices you can still add, as you won’t see this in the “lsblk” output:

tumbleweed:~ $ lsblk | grep -w "4G"

└─vda3 254:3 0 1.4G 0 part [SWAP]

vdb 254:16 0 4G 0 disk /mnt/dummy

vdc 254:32 0 4G 0 disk

vdd 254:48 0 4G 0 disk

vde 254:64 0 4G 0 disk

vdf 254:80 0 4G 0 disk

vdg 254:96 0 4G 0 disk

You do see it, however, in the “df -h” output:

tumbleweed:~ $ df -h | grep dummy

/dev/vdb:/dev/vdc 7.3G 2.0M 7.2G 1% /mnt/dummy
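Since “lsblk” only shows the mount point next to the first device, one way to find out which disks are still free is to compare the colon-separated device list from the “df” output with the disks you know about. The following Python sketch does just that. The function names and the hard-coded disk list are assumptions made for illustration; they are not part of bcachefs-tools:

```python
def member_devices(df_source):
    """Split the colon-separated source column that df prints for a
    multi-device bcachefs file system into a list of device paths."""
    return [dev for dev in df_source.split(":") if dev]


def unused_disks(all_disks, df_source):
    """Return the disks that are not members of the mounted file system,
    keeping the order of all_disks."""
    used = set(member_devices(df_source))
    return [disk for disk in all_disks if disk not in used]


# Hypothetical inventory: the six 4G disks from the lsblk output above.
disks = ["/dev/vd" + letter for letter in "bcdefg"]

# The first column of the df output for /mnt/dummy.
print(unused_disks(disks, "/dev/vdb:/dev/vdc"))
```

The same colon-separated string is what “mount -t bcachefs” and “bcachefs mount” expect, so joining a device list with “:” gives you the mount source again.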

Another way to get those details is once more to use the “bcachefs” utility:

tumbleweed:~ $ bcachefs fs usage /mnt/dummy/

Filesystem: d6f85f8f-dc12-4e83-8547-6fa8312c8eca

Size: 7902739968

Used: 76021760

Online reserved: 0

Data type Required/total Durability Devices

btree: 1/1 1 [vdb] 1048576

btree: 1/1 1 [vdc] 1048576

(no label) (device 0): vdb rw

data buckets fragmented

free: 4256956416 16239

sb: 3149824 13 258048

journal: 33554432 128

btree: 1048576 4

user: 0 0

cached: 0 0

parity: 0 0

stripe: 0 0

need_gc_gens: 0 0

need_discard: 0 0

capacity: 4294967296 16384

(no label) (device 1): vdc rw

data buckets fragmented

free: 4256956416 16239

sb: 3149824 13 258048

journal: 33554432 128

btree: 1048576 4

user: 0 0

cached: 0 0

parity: 0 0

stripe: 0 0

need_gc_gens: 0 0

need_discard: 0 0

capacity: 4294967296 16384
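The per-device numbers in this output are consistent with each other. Assuming the 256 KiB bucket size that “bcachefs format” reported in the first post, the bucket counts add up to the capacity line, and the “fragmented” value for the superblock is the unused remainder of its buckets. This small Python sketch redoes the arithmetic for one device; the variable names are made up for illustration:

```python
# Bucket size as reported by "bcachefs format" ("Bucket size: 256 KiB").
BUCKET_SIZE = 256 * 1024  # bytes

# Bucket counts per data type for one device, copied from the output above.
buckets = {"free": 16239, "sb": 13, "journal": 128, "btree": 4}

# Bytes of superblock data, also copied from the output above.
SB_DATA_BYTES = 3149824

total_buckets = sum(buckets.values())
capacity_bytes = total_buckets * BUCKET_SIZE
free_bytes = buckets["free"] * BUCKET_SIZE
journal_bytes = buckets["journal"] * BUCKET_SIZE
# Space allocated to the superblock buckets but not filled with data.
sb_fragmented = buckets["sb"] * BUCKET_SIZE - SB_DATA_BYTES

print(total_buckets, capacity_bytes, free_bytes, journal_bytes, sb_fragmented)
```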

Note that shrinking a file system on a device is currently not supported, only growing.

In the next post we’ll look at how you can mirror your data across multiple devices.

The post Another file system for Linux: bcachefs (2) – multi device file systems first appeared on dbi Blog.

Another file system for Linux: bcachefs (1) – basics

When Linux 6.7 (already end of life) was released some time ago, another file system made it into the kernel: bcachefs. This is another copy-on-write file system like ZFS or Btrfs. The goal of this post is not to compare them with regard to features and performance, but just to give you the necessary bits to get started. If you want to try this out for yourself, you obviously need at least version 6.7 of the Linux kernel. You can either build it yourself or use a distribution of your choice that ships kernel 6.7 or later as an option. I’ll be using openSUSE Tumbleweed, as this is a rolling release and new kernel versions make it into the distribution quite quickly after they’ve been released.

When you install Tumbleweed as of today, you’ll get a 6.8 kernel which is fine if you want to play around with bcachefs:

tumbleweed:~ $ uname -a

Linux tumbleweed 6.8.5-1-default #1 SMP PREEMPT_DYNAMIC Thu Apr 11 04:31:19 UTC 2024 (542f698) x86_64 x86_64 x86_64 GNU/Linux

Let’s start very simple: one device, one file system. Usually you create a new file system with the mkfs command, but you’ll quickly notice that there is nothing for bcachefs:

tumbleweed:~ $ mkfs.[TAB][TAB]

mkfs.bfs mkfs.btrfs mkfs.cramfs mkfs.ext2 mkfs.ext3 mkfs.ext4 mkfs.fat mkfs.minix mkfs.msdos mkfs.ntfs mkfs.vfat

By default there is also no command which starts with “bca”:

tumbleweed:~ # bca[TAB][TAB]

The utilities you need to get started need to be installed on Tumbleweed:

tumbleweed:~ $ zypper se bcachefs

Loading repository data...

Reading installed packages...

S | Name | Summary | Type

--+----------------+--------------------------------------+--------

| bcachefs-tools | Configuration utilities for bcachefs | package

tumbleweed:~ $ zypper in -y bcachefs-tools

Loading repository data...

Reading installed packages...

Resolving package dependencies...

The following 2 NEW packages are going to be installed:

bcachefs-tools libsodium23

2 new packages to install.

Overall download size: 1.4 MiB. Already cached: 0 B. After the operation, additional 3.6 MiB will be used.

Backend: classic_rpmtrans

Continue? [y/n/v/...? shows all options] (y): y

Retrieving: libsodium23-1.0.18-2.16.x86_64 (Main Repository (OSS)) (1/2), 169.7 KiB

Retrieving: libsodium23-1.0.18-2.16.x86_64.rpm ...........................................................................................................[done (173.6 KiB/s)]

Retrieving: bcachefs-tools-1.6.4-1.2.x86_64 (Main Repository (OSS)) (2/2), 1.2 MiB

Retrieving: bcachefs-tools-1.6.4-1.2.x86_64.rpm ............................................................................................................[done (5.4 MiB/s)]

Checking for file conflicts: ...........................................................................................................................................[done]

(1/2) Installing: libsodium23-1.0.18-2.16.x86_64 .......................................................................................................................[done]

(2/2) Installing: bcachefs-tools-1.6.4-1.2.x86_64 ......................................................................................................................[done]

Running post-transaction scripts .......................................................................................................................................[done]

This will give you “mkfs.bcachefs” and all the other utilities you’ll need to play with it.

I’ve prepared six small devices I can play with:

tumbleweed:~ $ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

sr0 11:0 1 276M 0 rom

vda 254:0 0 20G 0 disk

├─vda1 254:1 0 8M 0 part

├─vda2 254:2 0 18.6G 0 part /var

│ /srv

│ /usr/local

│ /opt

│ /root

│ /home

│ /boot/grub2/x86_64-efi

│ /boot/grub2/i386-pc

│ /.snapshots

│ /

└─vda3 254:3 0 1.4G 0 part [SWAP]

vdb 254:16 0 4G 0 disk

vdc 254:32 0 4G 0 disk

vdd 254:48 0 4G 0 disk

vde 254:64 0 4G 0 disk

vdf 254:80 0 4G 0 disk

vdg 254:96 0 4G 0 disk

In the most simple form (one device, one file system) you might start like this:

tumbleweed:~ $ bcachefs format /dev/vdb

External UUID: 127933ff-575b-484f-9eab-d0bf5dbf52b2

Internal UUID: fbf59149-3dc4-4871-bfb5-8fb910d0529f

Magic number: c68573f6-66ce-90a9-d96a-60cf803df7ef

Device index: 0

Label:

Version: 1.6: btree_subvolume_children

Version upgrade complete: 0.0: (unknown version)

Oldest version on disk: 1.6: btree_subvolume_children

Created: Wed Apr 17 10:39:58 2024

Sequence number: 0

Time of last write: Thu Jan 1 01:00:00 1970

Superblock size: 960 B/1.00 MiB

Clean: 0

Devices: 1

Sections: members_v1,members_v2

Features: new_siphash,new_extent_overwrite,btree_ptr_v2,extents_above_btree_updates,btree_updates_journalled,new_varint,journal_no_flush,alloc_v2,extents_across_btree_nodes

Compat features:

Options:

block_size: 512 B

btree_node_size: 256 KiB

errors: continue [ro] panic

metadata_replicas: 1

data_replicas: 1

metadata_replicas_required: 1

data_replicas_required: 1

encoded_extent_max: 64.0 KiB

metadata_checksum: none [crc32c] crc64 xxhash

data_checksum: none [crc32c] crc64 xxhash

compression: none

background_compression: none

str_hash: crc32c crc64 [siphash]

metadata_target: none

foreground_target: none

background_target: none

promote_target: none

erasure_code: 0

inodes_32bit: 1

shard_inode_numbers: 1

inodes_use_key_cache: 1

gc_reserve_percent: 8

gc_reserve_bytes: 0 B

root_reserve_percent: 0

wide_macs: 0

acl: 1

usrquota: 0

grpquota: 0

prjquota: 0

journal_flush_delay: 1000

journal_flush_disabled: 0

journal_reclaim_delay: 100

journal_transaction_names: 1

version_upgrade: [compatible] incompatible none

nocow: 0

members_v2 (size 144):

Device: 0

Label: (none)

UUID: bb28c803-621a-4007-af13-a9218808de8f

Size: 4.00 GiB

read errors: 0

write errors: 0

checksum errors: 0

seqread iops: 0

seqwrite iops: 0

randread iops: 0

randwrite iops: 0

Bucket size: 256 KiB

First bucket: 0

Buckets: 16384

Last mount: (never)

Last superblock write: 0

State: rw

Data allowed: journal,btree,user

Has data: (none)

Durability: 1

Discard: 0

Freespace initialized: 0

mounting version 1.6: btree_subvolume_children

initializing new filesystem

going read-write

initializing freespace

This is ready to mount, and we have our first bcachefs file system:

tumbleweed:~ $ mkdir /mnt/dummy

tumbleweed:~ $ mount /dev/vdb /mnt/dummy

tumbleweed:~ $ df -h | grep dummy

/dev/vdb 3.7G 2.0M 3.6G 1% /mnt/dummy

If you need encryption, this is supported as well. As you would expect, you are asked for a passphrase when you format the device:

tumbleweed:~ $ umount /mnt/dummy

tumbleweed:~ $ bcachefs format --encrypted --force /dev/vdb

Enter passphrase:

Enter same passphrase again:

/dev/vdb contains a bcachefs filesystem

External UUID: aa0a4742-46ed-4228-a590-62b8e2de7633

Internal UUID: 800b2306-3900-47fb-9a42-2f7e75baec99

Magic number: c68573f6-66ce-90a9-d96a-60cf803df7ef

Device index: 0

Label:

Version: 1.4: member_seq

Version upgrade complete: 0.0: (unknown version)

Oldest version on disk: 1.4: member_seq

Created: Wed Apr 17 10:46:06 2024

Sequence number: 0

Time of last write: Thu Jan 1 01:00:00 1970

Superblock size: 1.00 KiB/1.00 MiB

Clean: 0

Devices: 1

Sections: members_v1,crypt,members_v2

Features:

Compat features:

Options:

block_size: 512 B

btree_node_size: 256 KiB

errors: continue [ro] panic

metadata_replicas: 1

data_replicas: 1

metadata_replicas_required: 1

data_replicas_required: 1

encoded_extent_max: 64.0 KiB

metadata_checksum: none [crc32c] crc64 xxhash

data_checksum: none [crc32c] crc64 xxhash

compression: none

background_compression: none

str_hash: crc32c crc64 [siphash]

metadata_target: none

foreground_target: none

background_target: none

promote_target: none

erasure_code: 0

inodes_32bit: 1

shard_inode_numbers: 1

inodes_use_key_cache: 1

gc_reserve_percent: 8

gc_reserve_bytes: 0 B

root_reserve_percent: 0

wide_macs: 0

acl: 1

usrquota: 0

grpquota: 0

prjquota: 0

journal_flush_delay: 1000

journal_flush_disabled: 0

journal_reclaim_delay: 100

journal_transaction_names: 1

version_upgrade: [compatible] incompatible none

nocow: 0

members_v2 (size 144):

Device: 0

Label: (none)

UUID: 60de61d2-391b-4605-b0da-5f593b7c703f

Size: 4.00 GiB

read errors: 0

write errors: 0

checksum errors: 0

seqread iops: 0

seqwrite iops: 0

randread iops: 0

randwrite iops: 0

Bucket size: 256 KiB

First bucket: 0

Buckets: 16384

Last mount: (never)

Last superblock write: 0

State: rw

Data allowed: journal,btree,user

Has data: (none)

Durability: 1

Discard: 0

Freespace initialized: 0

To mount this you’ll need to specify the passphrase given above or it will fail:

tumbleweed:~ $ mount /dev/vdb /mnt/dummy/

Enter passphrase:

ERROR - bcachefs::commands::cmd_mount: Fatal error: failed to verify the password

tumbleweed:~ $ mount /dev/vdb /mnt/dummy/

Enter passphrase:

tumbleweed:~ $ df -h | grep dummy

/dev/vdb 3.7G 2.0M 3.6G 1% /mnt/dummy

tumbleweed:~ $ umount /mnt/dummy

Besides encryption, you can also use compression (gzip, lz4 and zstd are supported), e.g.:

tumbleweed:~ $ bcachefs format --compression=lz4 --force /dev/vdb

/dev/vdb contains a bcachefs filesystem

External UUID: 1ebcfe14-7d6a-43b1-8d48-47bcef0e7021

Internal UUID: 5117240c-95f1-4c2a-bed4-afb4c4fbb83c

Magic number: c68573f6-66ce-90a9-d96a-60cf803df7ef

Device index: 0

Label:

Version: 1.4: member_seq

Version upgrade complete: 0.0: (unknown version)

Oldest version on disk: 1.4: member_seq

Created: Wed Apr 17 10:54:02 2024

Sequence number: 0

Time of last write: Thu Jan 1 01:00:00 1970

Superblock size: 960 B/1.00 MiB

Clean: 0

Devices: 1

Sections: members_v1,members_v2

Features:

Compat features:

Options:

block_size: 512 B

btree_node_size: 256 KiB

errors: continue [ro] panic

metadata_replicas: 1

data_replicas: 1

metadata_replicas_required: 1

data_replicas_required: 1

encoded_extent_max: 64.0 KiB

metadata_checksum: none [crc32c] crc64 xxhash

data_checksum: none [crc32c] crc64 xxhash

compression: lz4

background_compression: none

str_hash: crc32c crc64 [siphash]

metadata_target: none

foreground_target: none

background_target: none

promote_target: none

erasure_code: 0

inodes_32bit: 1

shard_inode_numbers: 1

inodes_use_key_cache: 1

gc_reserve_percent: 8

gc_reserve_bytes: 0 B

root_reserve_percent: 0

wide_macs: 0

acl: 1

usrquota: 0

grpquota: 0

prjquota: 0

journal_flush_delay: 1000

journal_flush_disabled: 0

journal_reclaim_delay: 100

journal_transaction_names: 1

version_upgrade: [compatible] incompatible none

nocow: 0

members_v2 (size 144):

Device: 0

Label: (none)

UUID: 3bae44f0-3cd4-4418-8556-4342e74c22d1

Size: 4.00 GiB

read errors: 0

write errors: 0

checksum errors: 0

seqread iops: 0

seqwrite iops: 0

randread iops: 0

randwrite iops: 0

Bucket size: 256 KiB

First bucket: 0

Buckets: 16384

Last mount: (never)

Last superblock write: 0

State: rw

Data allowed: journal,btree,user

Has data: (none)

Durability: 1

Discard: 0

Freespace initialized: 0

tumbleweed:~ $ mount /dev/vdb /mnt/dummy/

tumbleweed:~ $ df -h | grep dummy

/dev/vdb 3.7G 2.0M 3.6G 1% /mnt/dummy

Metadata and data checksums are enabled by default:

tumbleweed:~ $ bcachefs show-super -l /dev/vdb | grep -i check

metadata_checksum: none [crc32c] crc64 xxhash

data_checksum: none [crc32c] crc64 xxhash

checksum errors: 0
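In this output each option line lists all possible values, and the active one appears to be the one in square brackets (crc32c here). If you want to pick up the active value in a script, a sketch like the following could do it. It is written in Python, the function name is made up for illustration, and it assumes the bracket convention shown above:

```python
import re


def selected_option(line):
    """Return (option name, active value) for one show-super option line.

    The active value is taken to be the choice in square brackets;
    it is None when the line contains no bracketed choice.
    """
    name, _, choices = line.partition(":")
    match = re.search(r"\[([^\]]+)\]", choices)
    return name.strip(), (match.group(1) if match else None)


# Lines copied from the grep output above.
for line in (
    "metadata_checksum: none [crc32c] crc64 xxhash",
    "data_checksum: none [crc32c] crc64 xxhash",
    "checksum errors: 0",
):
    print(selected_option(line))
```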

That’s it for the very basics. In the next post we’ll look at multi device file systems.

The post Another file system for Linux: bcachefs (1) – basics first appeared on dbi Blog.

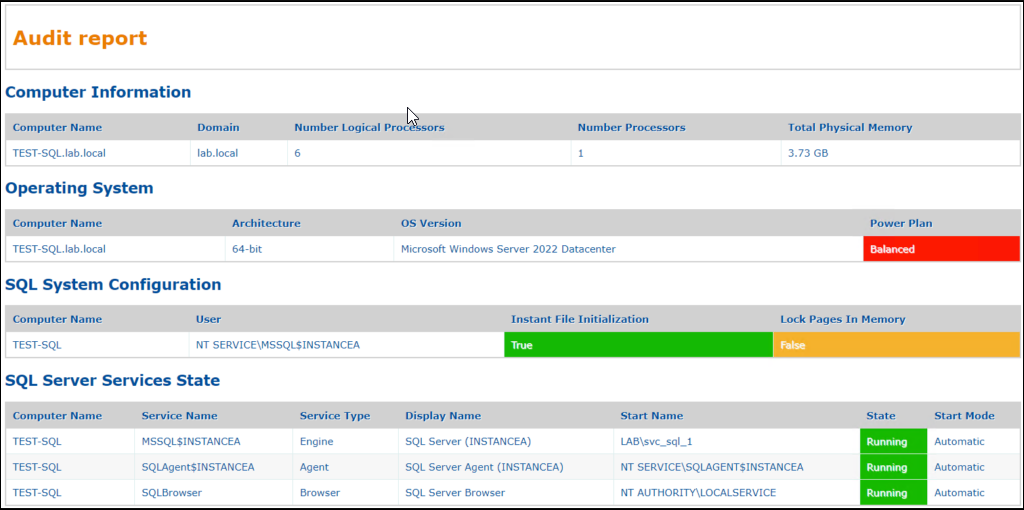

Build SQL Server audit reports with Powershell

When you are tasked with conducting an audit at a client’s site or on the environment you manage, you might find it necessary to automate the audit process in order to save time. However, it can be challenging to extract information from either the PowerShell console or a text file.

The idea here is to propose a solution that automates the generation of audit reports, so that you can quickly see how the audited environment is configured.

In broad terms, here is what we will implement:

- Define the environment we wish to audit. We centralize the configuration of our environments and all the parameters we will use.

- Define the checks or tests we would like to perform.

- Execute these checks. In our case, we will mostly use dbatools to perform the checks. However, it’s possible that you may not be able to use dbatools in your environment for security reasons, for example. In that case, you could replace calls to dbatools with calls to PowerShell functions.

- Produce an audit report.

Here are the technologies we will use in our project :

- SQL Server

- PowerShell

- Windows Server

- XML, XSLT and JSON

In our example, we use the dbatools module in order to get some information related to the environment(s) we audit.

Reference : https://dbatools.io/

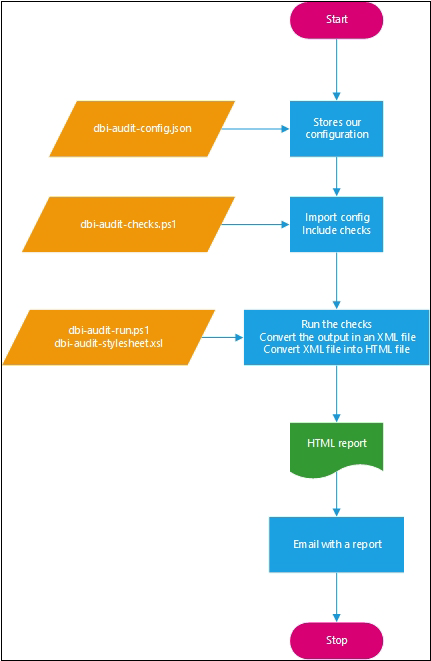

Global architecture

Here is how our solution will work:

- We store the configuration of our environment in a JSON file. This avoids storing certain parameters in the PowerShell code.

- We import our configuration (our JSON file). We centralize in one file all the checks, tests to be performed. The configuration stored in the JSON file is passed to the tests.

- We execute all the tests to be performed, then we generate an HTML file (applying an XSLT style sheet) from the collected information.

- We can then send this information by email (for example).
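To make the data flow more concrete, here is a sketch of the intermediate XML that the XSLT stylesheet is applied to: one element per check, one child element per result row, and one attribute per property. It is written in Python for brevity and is not the author’s PowerShell code. The function name and the sample values (such as the “Balanced” power plan) are assumptions made for illustration:

```python
import xml.etree.ElementTree as ET


def build_report(results):
    """Build the report tree from a mapping of check name -> list of rows.

    Each row is a dict of property name -> value and becomes one element
    whose attributes carry the properties.
    """
    root = ET.Element("DbiAuditReport")
    for check_name, rows in results.items():
        # One container element per check, e.g. <CheckOperatingSystem>.
        check_element = ET.SubElement(root, "Check" + check_name)
        for row in rows:
            # One element per result row, e.g. <OperatingSystem .../>.
            row_element = ET.SubElement(check_element, check_name)
            for key, value in row.items():
                row_element.set(key, str(value))
    return root


# Hypothetical result of one check against one server.
sample = {
    "OperatingSystem": [
        {"ComputerName": "TEST-SQL", "ActivePowerPlan": "Balanced"},
    ]
}

report = build_report(sample)
print(ET.tostring(report, encoding="unicode"))
```

Keeping the collected data in a neutral XML document is what makes it possible to render the same results differently just by swapping the stylesheet.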

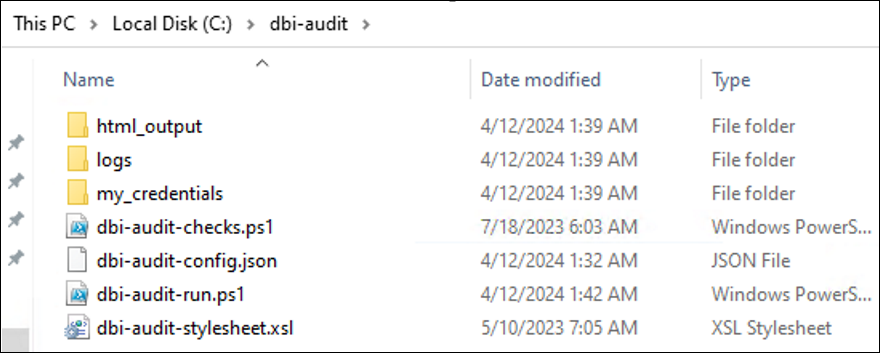

Here are some details about the structure of our project :

| Folder | Type | File | Description | Details |
|---|---|---|---|---|
| dbi-audit | JSON file | dbi-audit-config.json | Contains some pieces of information related to the environment you would like to audit. | The file is called by dbi-audit-checks.ps1. We import that file and parse it. E.g. if you need to add new servers to audit, you can edit that file and run a new audit. |
| dbi-audit | PS1 file | dbi-audit-checks.ps1 | Stores the checks to perform on the environment(s). | That file acts as a “library”: it contains all the checks to perform and centralizes all the functions. |
| dbi-audit | PS1 file | dbi-audit-run.ps1 | Runs the checks and transforms the output into an HTML file. | It’s the most important file: it runs the checks, builds the HTML report and applies a stylesheet. It can also send the report by email. |
| dbi-audit | XSL file | dbi-audit-stylesheet.xsl | Contains the stylesheet to apply to the HTML report. | It’s where you define what your HTML report will look like. |
| html_output | Folder | – | Will contain the audit reports produced. | It stores HTML reports. |

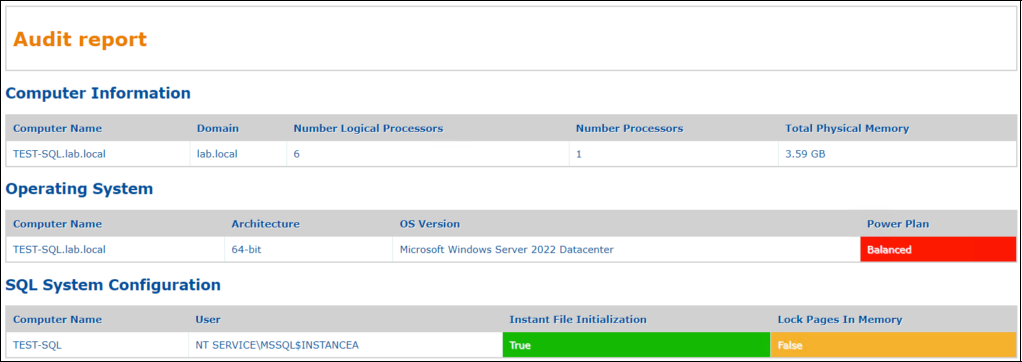

What does it look like ?

How does it work ?

Implementation

Code – A basic implementation :

dbi-audit-config.json :

[

{

"Name": "dbi-app.computername",

"Value": [

"TEST-SQL"

],

"Description": "Windows servers list to audit"

},

{

"Name": "dbi-app.sqlinstance",

"Value": [

"TEST-SQL\\INSTANCEA"

],

"Description": "SQL Server list to audit"

},

{

"Name": "dbi-app.checkcomputersinformation.enabled",

"Value": "True",

"Description": "Get some information on OS level"

},

{

"Name": "dbi-app.checkoperatingsystem.enabled",

"Value": "True",

"Description": "Perform some OS checks"

},

{

"Name": "dbi-app.checksqlsystemconfiguration.enabled",

"Value": "True",

"Description": "Check some SQL Server system settings"

}

]

dbi-audit-checks.ps1 :

#We import our configuration

$AuditConfig = [PSCustomObject](Get-Content .\dbi-audit-config.json | Out-String | ConvertFrom-Json)

#We retrieve the values contained in our json file. Each value is stored in a variable

$Computers = $AuditConfig | Where-Object { $_.Name -eq 'dbi-app.computername' } | Select-Object Value

$SQLInstances = $AuditConfig | Where-Object { $_.Name -eq 'dbi-app.sqlinstance' } | Select-Object Value

$UnitFileSize = ($AuditConfig | Where-Object { $_.Name -eq 'app.unitfilesize' } | Select-Object Value).Value

#Our configuration file allows us to enable or disable some checks. We retrieve those values as well.

#The JSON file stores these flags as strings, so we compare them with 'True' to get real booleans

$EnableCheckComputersInformation = (($AuditConfig | Where-Object { $_.Name -eq 'dbi-app.checkcomputersinformation.enabled' } | Select-Object Value).Value -eq 'True')

$EnableCheckOperatingSystem = (($AuditConfig | Where-Object { $_.Name -eq 'dbi-app.checkoperatingsystem.enabled' } | Select-Object Value).Value -eq 'True')

$EnableCheckSQLSystemConfiguration = (($AuditConfig | Where-Object { $_.Name -eq 'dbi-app.checksqlsystemconfiguration.enabled' } | Select-Object Value).Value -eq 'True')

#Used to invoke command queries

$ComputersList = @()

$ComputersList += $Computers | Foreach-Object {

$_.Value

}

<#

Get Computer Information

#>

function CheckComputersInformation()

{

if ($EnableCheckComputersInformation)

{

$ComputersInformationList = @()

$ComputersInformationList += $Computers | Foreach-Object {

Get-DbaComputerSystem -ComputerName $_.Value |

Select-Object ComputerName, Domain, NumberLogicalProcessors,

NumberProcessors, TotalPhysicalMemory

}

}

return $ComputersInformationList

}

<#

Get OS Information

#>

function CheckOperatingSystem()

{

if ($EnableCheckOperatingSystem)

{

$OperatingSystemList = @()

$OperatingSystemList += $Computers | Foreach-Object {

Get-DbaOperatingSystem -ComputerName $_.Value |

Select-Object ComputerName, Architecture, OSVersion, ActivePowerPlan

}

}

return $OperatingSystemList

}

<#

Get SQL Server/OS Configuration : IFI, LockPagesInMemory

#>

function CheckSQLSystemConfiguration()

{

if ($EnableCheckSQLSystemConfiguration)

{

$SQLSystemConfigurationList = @()

$SQLSystemConfigurationList += $Computers | Foreach-Object {

$ComputerName = $_.Value

Get-DbaPrivilege -ComputerName $ComputerName |

Where-Object { $_.User -like '*MSSQL*' } |

Select-Object ComputerName, User, InstantFileInitialization, LockPagesInMemory

$ComputerName = $Null

}

}

return $SQLSystemConfigurationList

}
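The configuration lookup used by the checks boils down to “find the entry with a given name and read its value”. One detail deserves attention: the enabled flags are stored as the strings "True" and "False", and a non-empty string is truthy on its own, so the flag has to be compared or converted explicitly. The following Python sketch illustrates this under stated assumptions: the helper names are hypothetical, and the "False" entry was added for the sake of the example (the sample configuration above only contains "True"):

```python
import json

# Shortened configuration in the same Name/Value layout as dbi-audit-config.json.
CONFIG_JSON = """
[
  {"Name": "dbi-app.computername", "Value": ["TEST-SQL"]},
  {"Name": "dbi-app.checkoperatingsystem.enabled", "Value": "True"},
  {"Name": "dbi-app.checksqlsystemconfiguration.enabled", "Value": "False"}
]
"""


def get_setting(config, name, default=None):
    """Return the Value of the first entry whose Name matches, else default."""
    for entry in config:
        if entry["Name"] == name:
            return entry["Value"]
    return default


def is_enabled(config, name):
    """Interpret a string flag explicitly; missing settings count as disabled."""
    return str(get_setting(config, name, "False")).strip().lower() == "true"


config = json.loads(CONFIG_JSON)

print(get_setting(config, "dbi-app.computername"))
print(is_enabled(config, "dbi-app.checkoperatingsystem.enabled"))
print(is_enabled(config, "dbi-app.checksqlsystemconfiguration.enabled"))
# A plain truthiness test would treat the string "False" as enabled.
print(bool("False"))
```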

dbi-audit-run.ps1 :

#This script accepts one parameter: the stylesheet to apply to our HTML report

Param(

[parameter(mandatory=$true)][string]$XSLStyleSheet

)

# We import the checks to run

. .\dbi-audit-checks.ps1

#Setup the XML configuration

$ScriptLocation = Get-Location

$XslOutputPath = "$($ScriptLocation.Path)\$($XSLStyleSheet)"

$FileSavePath = "$($ScriptLocation.Path)\html_output"

[System.XML.XMLDocument]$XmlOutput = New-Object System.XML.XMLDocument

[System.XML.XMLElement]$XmlRoot = $XmlOutput.CreateElement("DbiAuditReport")

$Null = $XmlOutput.appendChild($XmlRoot)

#We run the checks. Each check is called once and its result is stored in an ordered hashtable

#We then walk through that hashtable, one entry per check

#Each result is appended to the XML structure we build

$FunctionsName = @("CheckComputersInformation", "CheckOperatingSystem", "CheckSQLSystemConfiguration")

$FunctionsStore = [ordered] @{}

$FunctionsStore['ComputersInformation'] = CheckComputersInformation

$FunctionsStore['OperatingSystem'] = CheckOperatingSystem

$FunctionsStore['SQLSystemConfiguration'] = CheckSQLSystemConfiguration

$i = 0

$FunctionsStore.Keys | ForEach-Object {

[System.XML.XMLElement]$xmlSQLChecks = $XmlRoot.appendChild($XmlOutput.CreateElement($FunctionsName[$i]))

$Results = $FunctionsStore[$_]

foreach ($Data in $Results)

{

$xmlServicesEntry = $xmlSQLChecks.appendChild($XmlOutput.CreateElement($_))

foreach ($DataProperties in $Data.PSObject.Properties)

{

$xmlServicesEntry.SetAttribute($DataProperties.Name, $DataProperties.Value)

}

}

$i++

}

#We create our XML file

$XmlRoot.SetAttribute("EndTime", (Get-Date -Format yyyy-MM-dd_h-mm))

$ReportXMLFileName = [string]::Format("{0}\{1}_DbiAuditReport.xml", $FileSavePath, (Get-Date).tostring("MM-dd-yyyy_HH-mm-ss"))

$ReportHTMLFileName = [string]::Format("{0}\{1}_DbiAuditReport.html", $FileSavePath, (Get-Date).tostring("MM-dd-yyyy_HH-mm-ss"))

$XmlOutput.Save($ReportXMLFileName)

#We apply our XSLT stylesheet

[System.Xml.Xsl.XslCompiledTransform]$XSLT = New-Object System.Xml.Xsl.XslCompiledTransform

$XSLT.Load($XslOutputPath)

#We build our HTML file

$XSLT.Transform($ReportXMLFileName, $ReportHTMLFileName)

dbi-audit-stylesheet.xsl :

<xsl:stylesheet xmlns:xsl="http://www.w3.org/1999/XSL/Transform" version="1.0">

<xsl:template match="DbiAuditReport">

<xsl:text disable-output-escaping='yes'>&lt;!DOCTYPE html&gt;</xsl:text>

<html>

<head>

<meta http-equiv="X-UA-Compatible" content="IE=9" />

<style>

body {

font-family: Verdana, sans-serif;

font-size: 15px;

line-height: 1.5;

background-color: #FCFCFC;

}

h1 {

color: #EB7D00;

font-size: 30px;

}

h2 {

color: #004F9C;

margin-left: 2.5%;

}

h3 {

font-size: 24px;

}

table {

width: 95%;

margin: auto;

border: solid 2px #D1D1D1;

border-collapse: collapse;

border-spacing: 0;

margin-bottom: 1%;

}

table tr th {

background-color: #D1D1D1;

border: solid 1px #D1D1D1;

color: #004F9C;

padding: 10px;

text-align: left;

text-shadow: 1px 1px 1px #fff;

}

table td {

border: solid 1px #DDEEEE;

color: #004F9C;

padding: 10px;

text-shadow: 1px 1px 1px #fff;

}

table tr:nth-child(even) {

background: #F7F7F7

}

table tr:nth-child(odd) {

background: #FFFFFF

}

table tr .check_failed {

color: #F7F7F7;

background-color: #FC1703;

}

table tr .check_passed {

color: #F7F7F7;

background-color: #16BA00;

}

table tr .check_in_between {

color: #F7F7F7;

background-color: #F5B22C;

}

</style>

</head>

<body>

<table>

<tr>

<td>

<h1>Audit report</h1> </td>

</tr>

</table>

<caption>

<xsl:apply-templates select="CheckComputersInformation" />

<xsl:apply-templates select="CheckOperatingSystem" />

<xsl:apply-templates select="CheckSQLSystemConfiguration" /> </caption>

</body>

</html>

</xsl:template>

<xsl:template match="CheckComputersInformation">

<h2>Computer Information</h2>

<table>

<tr>

<th>Computer Name</th>

<th>Domain</th>

<th>Number Logical Processors</th>

<th>Number Processors</th>

<th>Total Physical Memory</th>

</tr>

<tbody>

<xsl:apply-templates select="ComputersInformation" /> </tbody>

</table>

</xsl:template>

<xsl:template match="ComputersInformation">

<tr>

<td>

<xsl:value-of select="@ComputerName" />

</td>

<td>

<xsl:value-of select="@Domain" />

</td>

<td>

<xsl:value-of select="@NumberLogicalProcessors" />

</td>

<td>

<xsl:value-of select="@NumberProcessors" />

</td>

<td>

<xsl:value-of select="@TotalPhysicalMemory" />

</td>

</tr>

</xsl:template>

<xsl:template match="CheckOperatingSystem">

<h2>Operating System</h2>

<table>

<tr>

<th>Computer Name</th>

<th>Architecture</th>

<th>OS Version</th>

<th>Power Plan</th>

</tr>

<tbody>

<xsl:apply-templates select="OperatingSystem" /> </tbody>

</table>

</xsl:template>

<xsl:template match="OperatingSystem">

<tr>

<td>

<xsl:value-of select="@ComputerName" />

</td>

<td>

<xsl:value-of select="@Architecture" />

</td>

<td>

<xsl:value-of select="@OSVersion" />

</td>

<xsl:choose>

<xsl:when test="@ActivePowerPlan = 'High performance'">

<td class="check_passed">

<xsl:value-of select="@ActivePowerPlan" />

</td>

</xsl:when>

<xsl:otherwise>

<td class="check_failed">

<xsl:value-of select="@ActivePowerPlan" />

</td>

</xsl:otherwise>

</xsl:choose>

</tr>

</xsl:template>

<xsl:template match="CheckSQLSystemConfiguration">

<h2>SQL System Configuration</h2>

<table>

<tr>

<th>Computer Name</th>

<th>User</th>

<th>Instant File Initialization</th>

<th>Lock Pages In Memory</th>

</tr>

<tbody>

<xsl:apply-templates select="SQLSystemConfiguration" /> </tbody>

</table>

</xsl:template>

<xsl:template match="SQLSystemConfiguration">

<tr>

<td>

<xsl:value-of select="@ComputerName" />

</td>

<td>

<xsl:value-of select="@User" />

</td>

<xsl:choose>

<xsl:when test="@InstantFileInitialization = 'True'">

<td class="check_passed">

<xsl:value-of select="@InstantFileInitialization" />

</td>

</xsl:when>

<xsl:otherwise>

<td class="check_failed">

<xsl:value-of select="@InstantFileInitialization" />

</td>

</xsl:otherwise>

</xsl:choose>

<xsl:choose>

<xsl:when test="@LockPagesInMemory = 'True'">

<td class="check_passed">

<xsl:value-of select="@LockPagesInMemory" />

</td>

</xsl:when>

<xsl:otherwise>

<td class="check_in_between">

<xsl:value-of select="@LockPagesInMemory" />

</td>

</xsl:otherwise>

</xsl:choose>

</tr>

</xsl:template>

</xsl:stylesheet>

How does it run ?

.\dbi-audit-run.ps1 -XSLStyleSheet .\dbi-audit-stylesheet.xsl

Output (what does it really look like ?) :

Nice to have

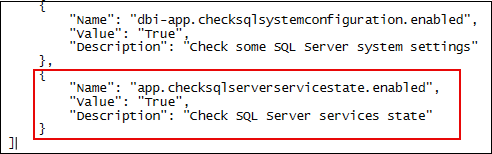

Let’s say I would like to add new checks. How would I proceed ?

- Edit the dbi-audit-checks.ps1

- Retrieve the information related to your check

$EnableCheckSQLServerServiceState = ($AuditConfig | Where-Object { $_.Name -eq 'dbi-app.checksqlserverservicestate.enabled' } | Select-Object Value).Value

- Add another function

function CheckSQLServerServiceState()

{

if ($EnableCheckSQLServerServiceState -eq $True)

{

$SQLServerServiceStateList = @()

$SQLServerServiceStateList += $Computers | Foreach-Object {

Get-DbaService -ComputerName $_.Value |

Select-Object ComputerName, ServiceName, ServiceType, DisplayName, StartName, State, StartMode

}

}

return $SQLServerServiceStateList

}

- Call it in the dbi-audit-run script

$FunctionsName = @("CheckComputersInformation", "CheckOperatingSystem", "CheckSQLSystemConfiguration", "CheckSQLServerServiceState")

$FunctionsStore = [ordered] @{}

$FunctionsStore['ComputersInformation'] = CheckComputersInformation

$FunctionsStore['OperatingSystem'] = CheckOperatingSystem

$FunctionsStore['SQLSystemConfiguration'] = CheckSQLSystemConfiguration

$FunctionsStore['SQLServerServiceState'] = CheckSQLServerServiceState

- Edit the dbi-audit-stylesheet.xsl to define how the collected information should be displayed (this is the most time-consuming part because it is not automated; I have not found a way to automate it yet)

<body>

<table>

<tr>

<td>

<h1>Audit report</h1>

</td>

</tr>

</table>

<caption>

<xsl:apply-templates select="CheckComputersInformation"/>

<xsl:apply-templates select="CheckOperatingSystem"/>

<xsl:apply-templates select="CheckSQLSystemConfiguration"/>

<xsl:apply-templates select="CheckSQLServerServiceState"/>

</caption>

</body>

...

<xsl:template match="CheckSQLServerServiceState">

<h2>SQL Server Services State</h2>

<table>

<tr>

<th>Computer Name</th>

<th>Service Name</th>

<th>Service Type</th>

<th>Display Name</th>

<th>Start Name</th>

<th>State</th>

<th>Start Mode</th>

</tr>

<tbody>

<xsl:apply-templates select="SQLServerServiceState"/>

</tbody>

</table>

</xsl:template>

<xsl:template match="SQLServerServiceState">

<tr>

<td><xsl:value-of select="@ComputerName"/></td>

<td><xsl:value-of select="@ServiceName"/></td>

<td><xsl:value-of select="@ServiceType"/></td>

<td><xsl:value-of select="@DisplayName"/></td>

<td><xsl:value-of select="@StartName"/></td>

<xsl:choose>

<xsl:when test="(@State = 'Stopped') and (@ServiceType = 'Engine')">

<td class="check_failed"><xsl:value-of select="@State"/></td>

</xsl:when>

<xsl:when test="(@State = 'Stopped') and (@ServiceType = 'Agent')">

<td class="check_failed"><xsl:value-of select="@State"/></td>

</xsl:when>

<xsl:otherwise>

<td class="check_passed"><xsl:value-of select="@State"/></td>

</xsl:otherwise>

</xsl:choose>

<td><xsl:value-of select="@StartMode"/></td>

</tr>

</xsl:template>

End result :

What about sending the report by email?

- We could add a function that sends an email with an attachment.

- Edit the dbi-audit-checks file

- Add a function Send-EmailWithAuditReport

- Add this piece of code to the function:

Send-MailMessage -SmtpServer mysmtpserver -From 'Sender' -To 'Recipient' -Subject 'Audit report' -Body 'Audit report' -Port 25 -Attachments $Attachments

- Edit the dbi-audit-run.ps1

- Add a call to the Send-EmailWithAuditReport function:

Send-EmailWithAuditReport -Attachments $Attachments

$ReportHTMLFileName = [string]::Format("{0}\{1}_DbiAuditReport.html", $FileSavePath, (Get-Date).tostring("MM-dd-yyyy_HH-mm-ss"))

...

Send-EmailWithAuditReport -Attachments $ReportHTMLFileName

The main idea of this solution is to be able to use the same functions while applying a different rendering. To achieve this, you would need to change the XSL stylesheet, or create another XSL stylesheet, and then pass the stylesheet to apply to the dbi-audit-run.ps1 file.

This would allow having the same code to perform the following tasks:

- Audit

- Health check

- …

The article Build SQL Server audit reports with Powershell first appeared on dbi Blog.

Rancher RKE2: Rancher roles for cluster autoscaler

The cluster autoscaler brings horizontal scaling to your cluster and is deployed into the cluster it autoscales. This is described in the following blog article: https://www.dbi-services.com/blog/rancher-autoscaler-enable-rke2-node-autoscaling/. That article did not go into much detail about the user and role configuration.

With Rancher, the cluster autoscaler uses a user’s API key. We will see how to configure minimal permissions by creating Rancher roles for cluster autoscaler.

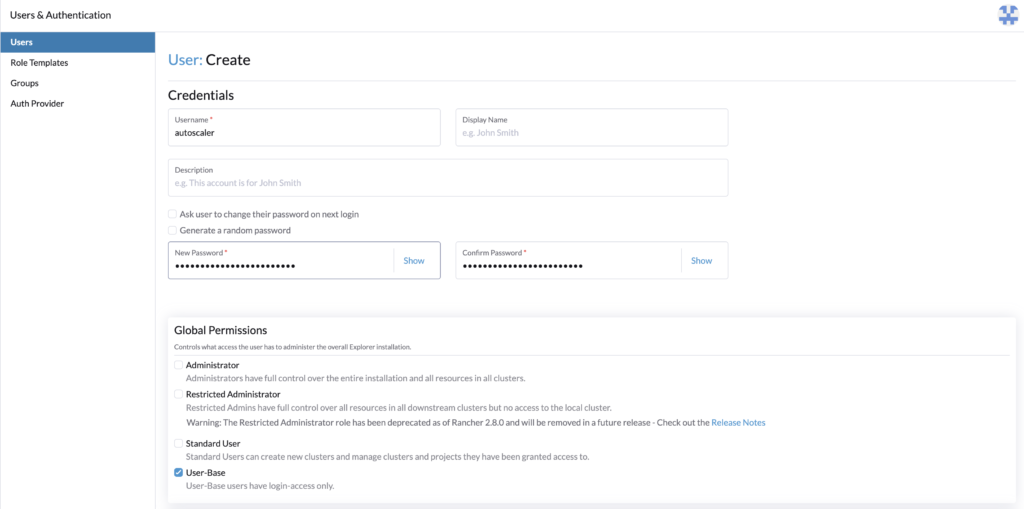

Rancher user

First, let’s create the user that will communicate with Rancher and whose token will be used. It will be given minimal access rights, which means login access only.

Go to Rancher > Users & Authentication > Users > Create.

- Set a username, for example, autoscaler

- Set the password

- Give User-Base permissions

- Create

The user is now created, let’s set Rancher roles with minimal permission for the cluster autoscaler.

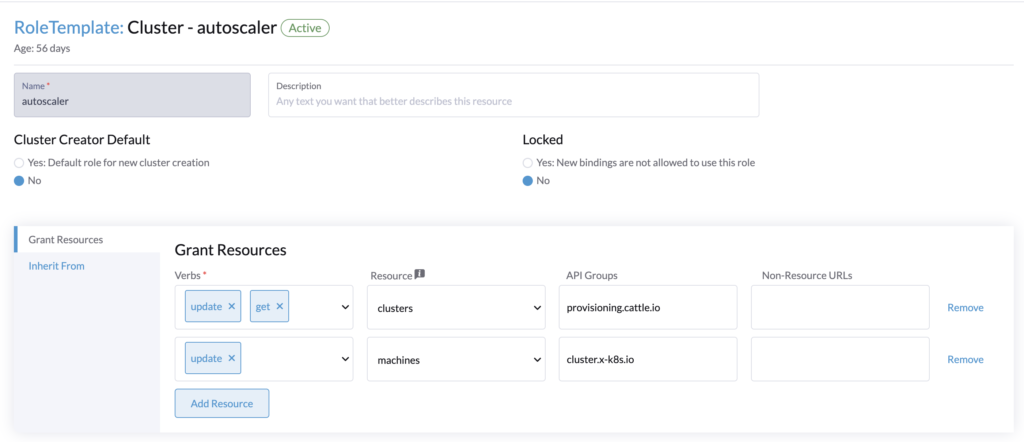

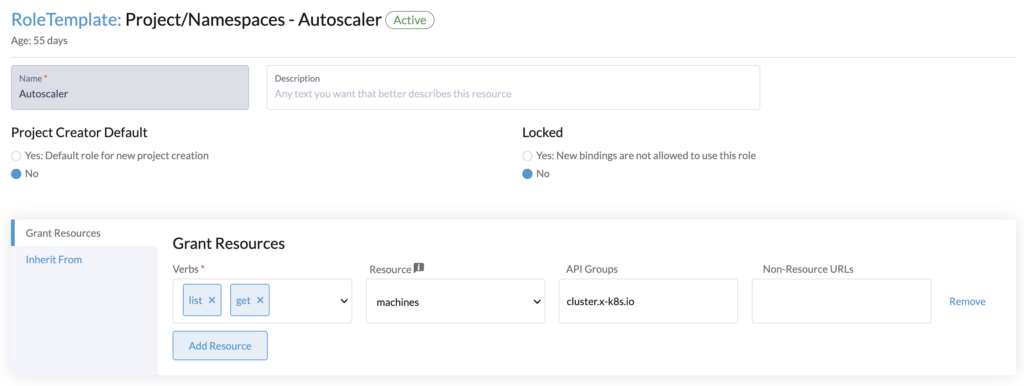

Rancher roles authorization

To make the cluster autoscaler work, the user whose API key is provided needs the following roles:

- Cluster role (for the cluster to autoscale)

Get/Update for clusters.provisioning.cattle.io

Update of machines.cluster.x-k8s.io

- Project role (for the namespace that contains the cluster resource (fleet-default))

Get/List of machines.cluster.x-k8s.io
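The permissions listed above can also be written down as rule sets, which makes them easier to review. The following is only a sketch: it assumes Kubernetes-style policy rules (apiGroups, resources, verbs), and the variable names and the helper `allowed_verbs` are illustrative, not an export from Rancher.

```python
import json

# Illustrative rule sets mirroring the permissions listed above.
# The dict layout follows Kubernetes-style policy rules (an assumption).
CLUSTER_ROLE_RULES = [
    {"apiGroups": ["provisioning.cattle.io"], "resources": ["clusters"], "verbs": ["get", "update"]},
    {"apiGroups": ["cluster.x-k8s.io"], "resources": ["machines"], "verbs": ["update"]},
]
PROJECT_ROLE_RULES = [
    {"apiGroups": ["cluster.x-k8s.io"], "resources": ["machines"], "verbs": ["get", "list"]},
]


def allowed_verbs(rules, api_group, resource):
    """Return the sorted verbs that the rules grant on api_group/resource."""
    verbs = set()
    for rule in rules:
        if api_group in rule["apiGroups"] and resource in rule["resources"]:
            verbs.update(rule["verbs"])
    return sorted(verbs)


if __name__ == "__main__":
    print(json.dumps({"cluster": CLUSTER_ROLE_RULES, "project": PROJECT_ROLE_RULES}, indent=2))
    print(allowed_verbs(CLUSTER_ROLE_RULES, "cluster.x-k8s.io", "machines"))
```

Keeping the two rule sets separate mirrors the split between the cluster role and the project role.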

Go to Rancher > Users & Authentication > Role Templates > Cluster > Create.

Create the cluster role. This role will be applied to every cluster that we want to autoscale.

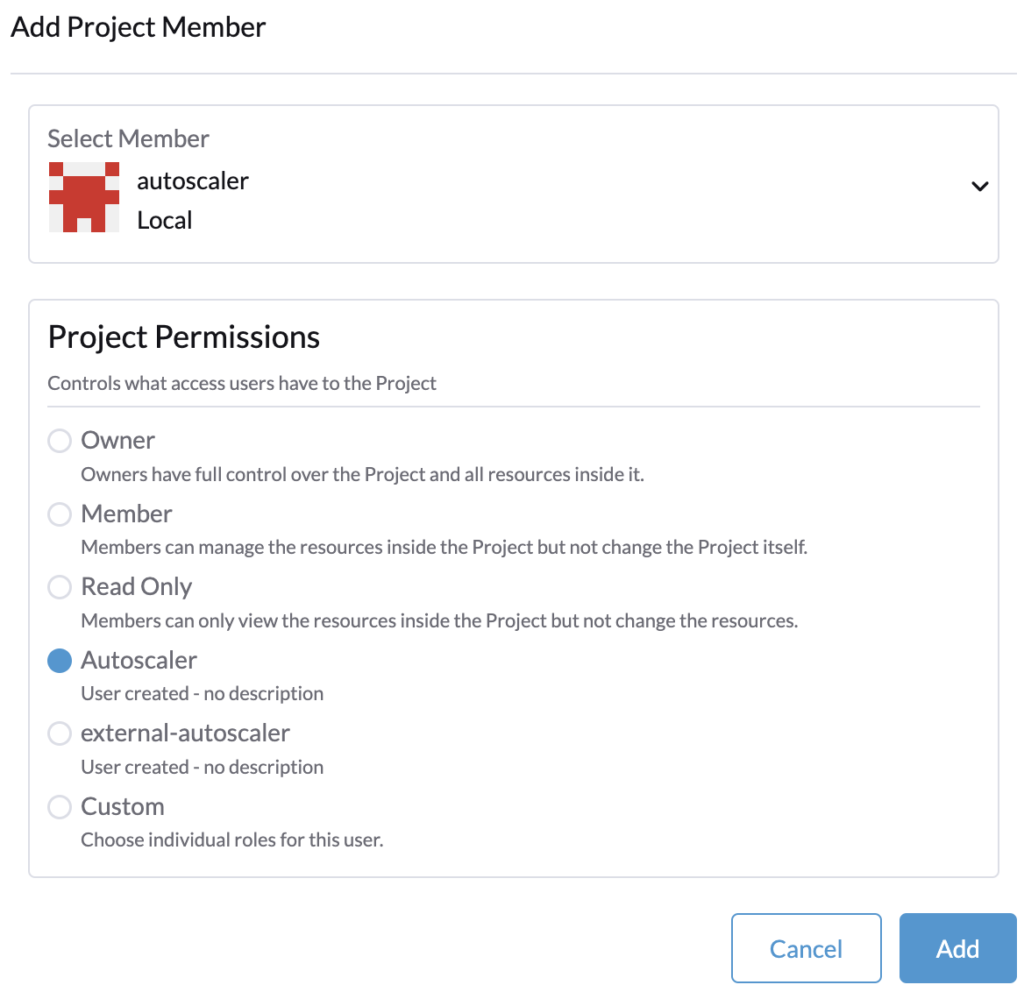

Then in Rancher > Users & Authentication > Role Templates > Project/Namespaces > Create.

Create the project role. It will be applied to the project of our local cluster (Rancher) that contains the namespace fleet-default.

Rancher roles assignment

The user and Rancher roles are created, let’s assign them.

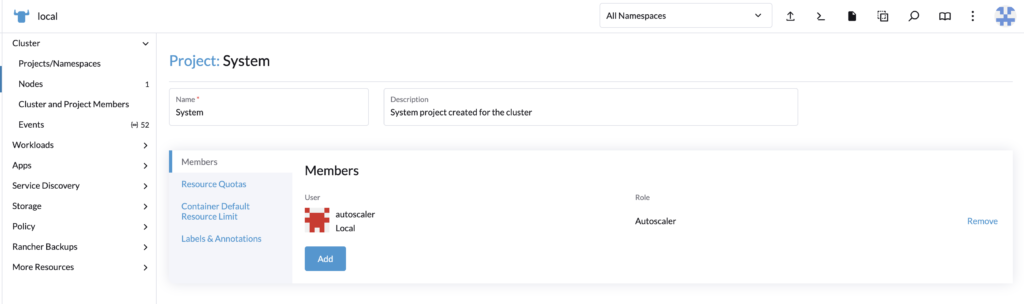

Project role

First, we will set the project role. This only has to be done once.

Go to the local cluster (Rancher), in Cluster > Project/Namespace.

Search for the fleet-default namespace, by default it is contained in the project System.

Edit the project System and add the user with the project role created previously.

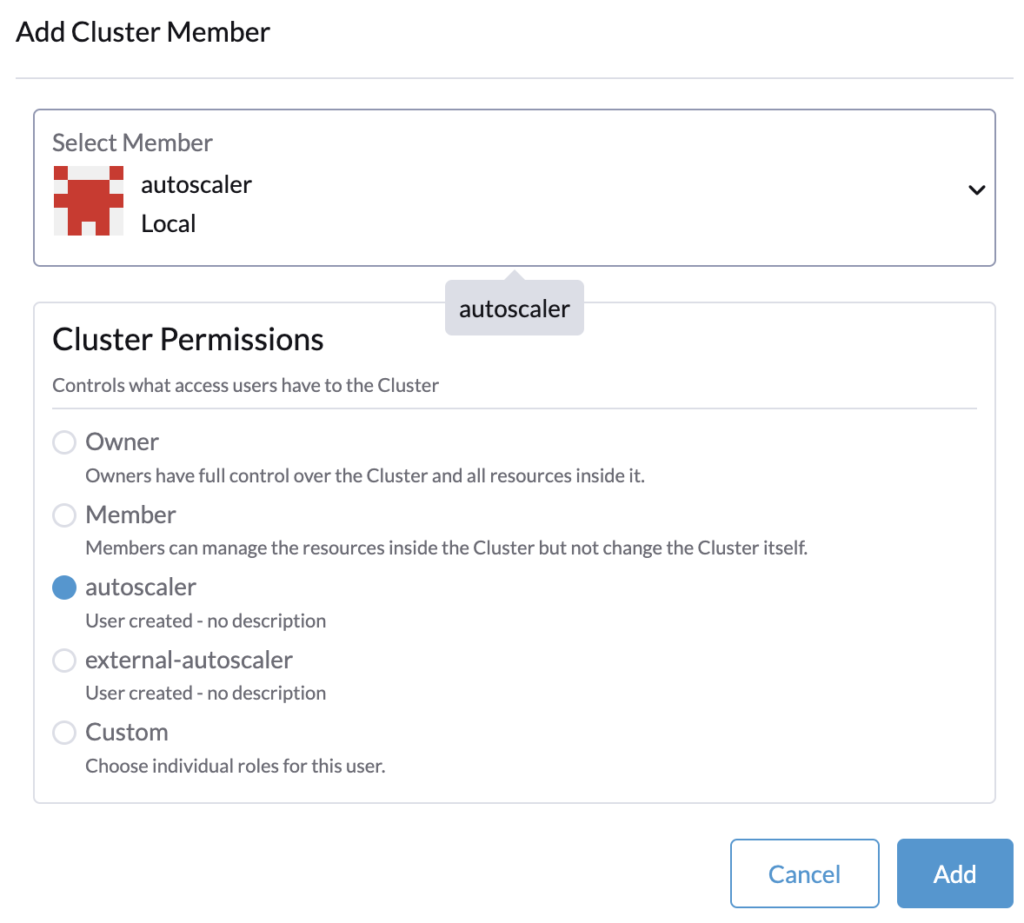

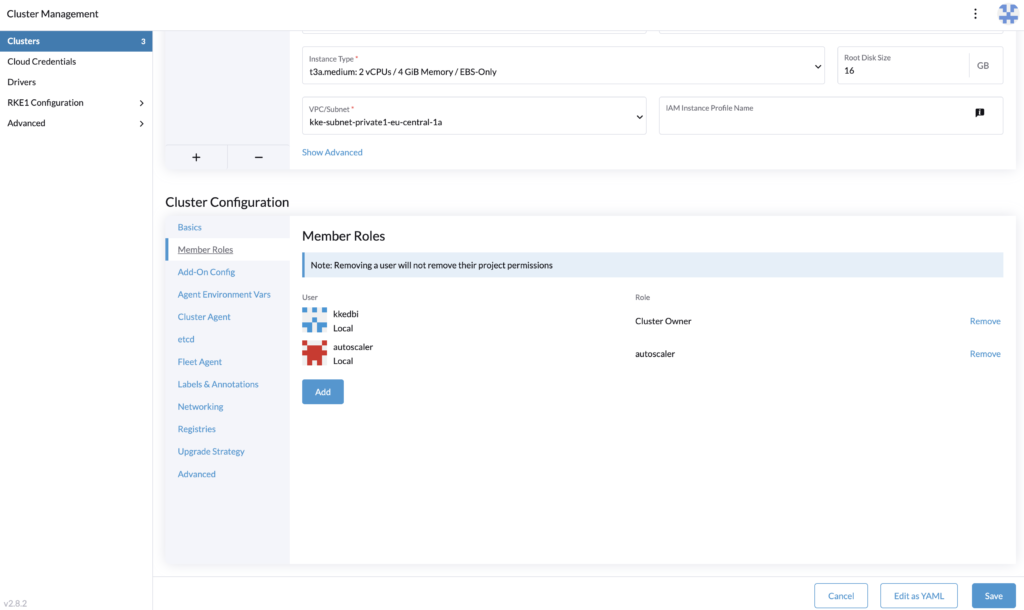

Cluster role

For each cluster where you will deploy the cluster autoscaler, you need to assign the user as a member with the cluster role.

In Rancher > Cluster Management, edit the cluster’s configuration and assign the user.

The roles assignment is done, let’s proceed to generate the token that is provided to the cluster autoscaler configuration.

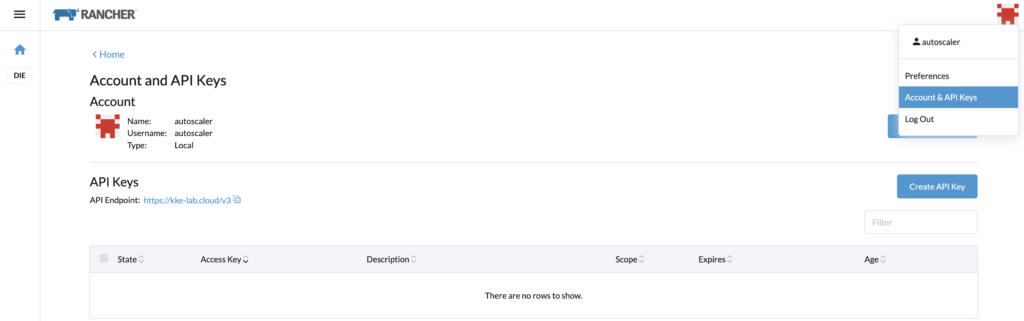

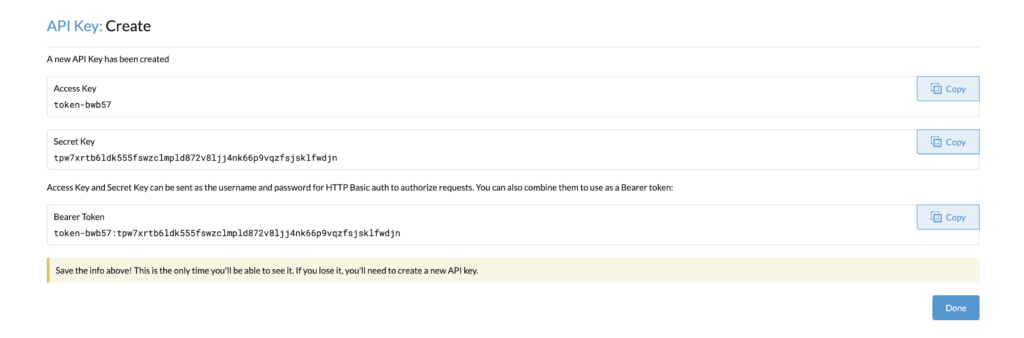

Rancher API keys

Log in with the autoscaler user, and go to its profile > Account & API Keys.

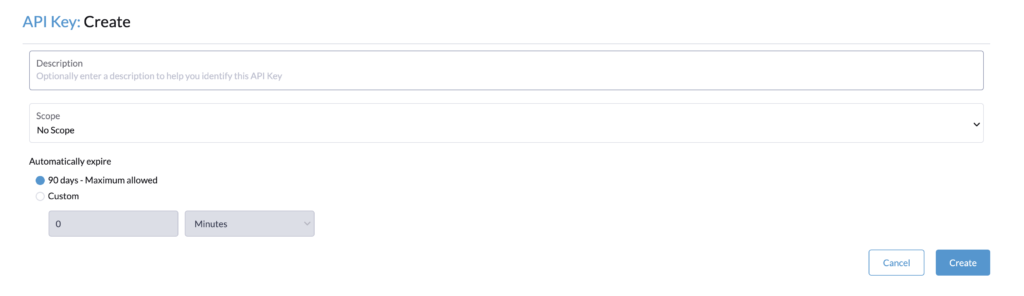

Let’s create an API Key for the cluster autoscaler configuration. Note that since a recent update of Rancher, API keys expire by default after 90 days.

If you see this limitation, you can do the following steps to have no expiration.

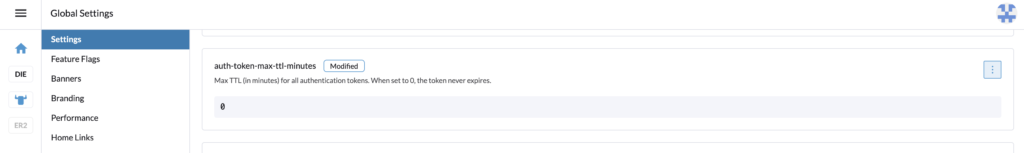

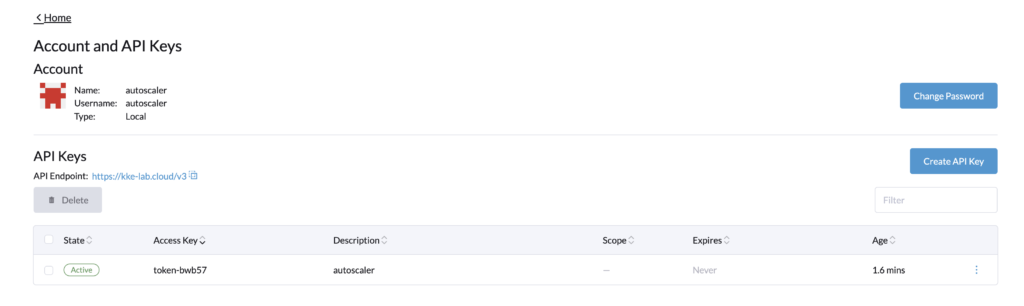

With the admin account, in Global settings > Settings, search for the setting auth-token-max-ttl-minutes and set it to 0.

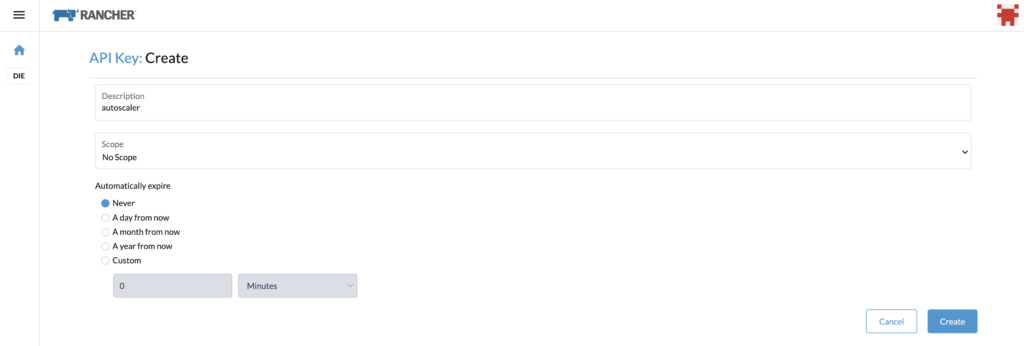

Go back with the autoscaler user and create the API Key, name it for example, autoscaler, and select “no scope”.

You can copy the Bearer Token, and use it for the cluster autoscaler configuration.

As seen above, the token never expires.
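Before handing the token to the cluster autoscaler, it can be worth checking that it is sent in the expected form. The sketch below only builds the request and does not send it; the host name, the token value and the `/v3` path are assumptions used for illustration.

```python
import urllib.request


def build_rancher_request(base_url, bearer_token, path="/v3"):
    """Build (but do not send) a request authenticated with a Rancher API key."""
    url = base_url.rstrip("/") + path
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {bearer_token}"})


if __name__ == "__main__":
    # Hypothetical values: replace with your Rancher URL and the copied Bearer Token.
    req = build_rancher_request("https://rancher.example.com", "token-xxxxx:secret")
    print(req.full_url)
    print(req.get_header("Authorization"))
```

Passing the resulting request to `urllib.request.urlopen` would perform the actual call.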

Finally, let’s reset the setting auth-token-max-ttl-minutes, either with the “use the default value” button or by restoring the value that was set previously.

We are now done with the roles configuration.

Conclusion

This blog article covers only a part of the setup of the cluster autoscaler for RKE2 provisioning. It explains the configuration of a Rancher user and Rancher roles with minimal permissions to enable the cluster autoscaler. It complements this blog article, https://www.dbi-services.com/blog/rancher-autoscaler-enable-rke2-node-autoscaling/, which covers the whole setup and deployment of the cluster autoscaler. If you are still wondering how to deploy the cluster autoscaler and make it work, check that other post.

Links

Rancher official documentation: Rancher

RKE2 official documentation: RKE2

GitHub cluster autoscaler: https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler

Blog – Rancher autoscaler – Enable RKE2 node autoscaling

https://www.dbi-services.com/blog/rancher-autoscaler-enable-rke2-node-autoscaling

Blog – Reestablish administrator role access to Rancher users

https://www.dbi-services.com/blog/reestablish-administrator-role-access-to-rancher-users/

Blog – Introduction and RKE2 cluster template for AWS EC2

https://www.dbi-services.com/blog/rancher-rke2-cluster-templates-for-aws-ec2

Blog – Rancher RKE2 templates – Assign members to clusters

https://www.dbi-services.com/blog/rancher-rke2-templates-assign-members-to-clusters

The article Rancher RKE2: Rancher roles for cluster autoscaler first appeared on dbi Blog.

Elasticsearch, Ingest Pipeline and Machine Learning

Elasticsearch has a few interesting features around Machine Learning. While I was looking for data to import into Elasticsearch, I found interesting data sets from Airbnb, especially reviews. I noticed that they do not contain any rating, only comments.

To get the sentiment of a review, I would like each review to be classified as one of:

- Negative

- Positive

- Neutral

For that purpose, I found that the cardiffnlp/twitter-roberta-base-sentiment-latest model suits my needs for my tests.

Import Model

Elasticsearch provides a tool to import models from Hugging Face into Elasticsearch itself: eland.

It is possible to install it or even use the pre-built docker image:

docker run -it --rm --network host docker.elastic.co/eland/eland

Let’s import the model:

eland_import_hub_model -u elastic -p 'password!' --hub-model-id cardiffnlp/twitter-roberta-base-sentiment-latest --task-type classification --url https://127.0.0.1:9200

After a minute, the import completes:

2024-04-16 08:12:46,825 INFO : Model successfully imported with id 'cardiffnlp__twitter-roberta-base-sentiment-latest'

I can also check that it was imported successfully with the following API call:

GET _ml/trained_models/cardiffnlp__twitter-roberta-base-sentiment-latest

And result (extract):

{

"count": 1,

"trained_model_configs": [

{

"model_id": "cardiffnlp__twitter-roberta-base-sentiment-latest",

"model_type": "pytorch",

"created_by": "api_user",

"version": "12.0.0",

"create_time": 1713255117150,

...

"description": "Model cardiffnlp/twitter-roberta-base-sentiment-latest for task type 'text_classification'",

"tags": [],

...

},

"classification_labels": [

"negative",

"neutral",

"positive"

],

...

]

}

Next, the model must be started:

POST _ml/trained_models/cardiffnlp__twitter-roberta-base-sentiment-latest/deployment/_start

This is subject to licensing. You might face this error “current license is non-compliant for [ml]“. For my tests, I used a trial.
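Once the deployment is started, the model can be tried out before it is wired into a pipeline. The sketch below only builds the path and body for such a test call; it assumes the trained model inference API (`_infer`) with a `docs` array and the default `text_field` input name, and the sample review text is made up.

```python
import json

MODEL_ID = "cardiffnlp__twitter-roberta-base-sentiment-latest"


def build_infer_request(texts, model_id=MODEL_ID):
    """Return the API path and JSON body for a test inference call."""
    path = f"_ml/trained_models/{model_id}/_infer"
    body = {"docs": [{"text_field": text} for text in texts]}
    return path, body


if __name__ == "__main__":
    # Made-up review text, for illustration only.
    path, body = build_infer_request(["The flat was clean and the host was friendly."])
    print("POST", path)
    print(json.dumps(body, indent=2))
```

The printed path and body can be pasted into the Dev Tools console as a POST request.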

I will use Filebeat to read the review.csv file and ingest it into Elasticsearch. filebeat.yml looks like this:

filebeat.inputs:

- type: log

  paths:

    - 'C:\csv_inject\*.csv'

output.elasticsearch:

  hosts: ["https://localhost:9200"]

  protocol: "https"

  username: "elastic"

  password: "password!"

  ssl:

    ca_trusted_fingerprint: fakefp4076a4cf5c1111ac586bafa385exxxxfde0dfe3cd7771ed

  indices:

    - index: "csv"

  pipeline: csv

So each time a new file lands in the csv_inject folder, Filebeat will read it and send its lines to my Elasticsearch setup, into the csv index.

Pipeline

An ingest pipeline can perform basic transformations on incoming data before it is indexed.

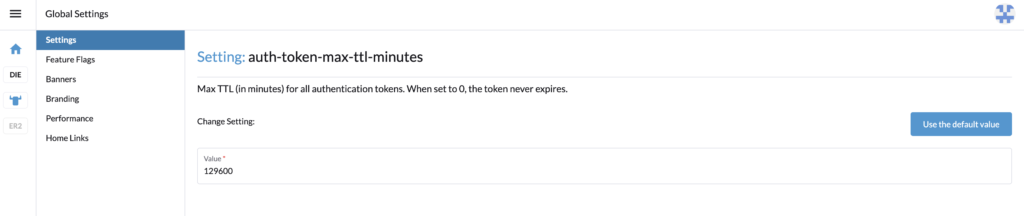

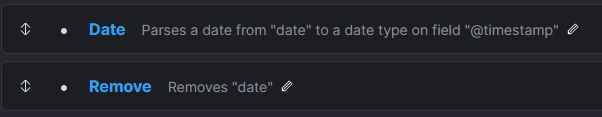

Data transformation

The first step consists of converting the message field, which contains one line of data, into several target fields (i.e. splitting the CSV). Next, the message field is removed. This looks like this in the Processors section of the ingest pipeline:

Next, I also want to replace the content of the default timestamp field (ie. @timestamp) with the timestamp of the review (and remove the date field after that):

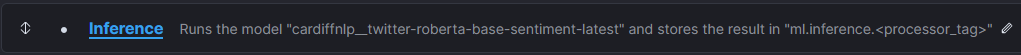

Inference

Now, I add the Inference step:

The only customization of that step is the field map: the default input field name is “text_field“, whereas in the reviews the field is named “comment“:

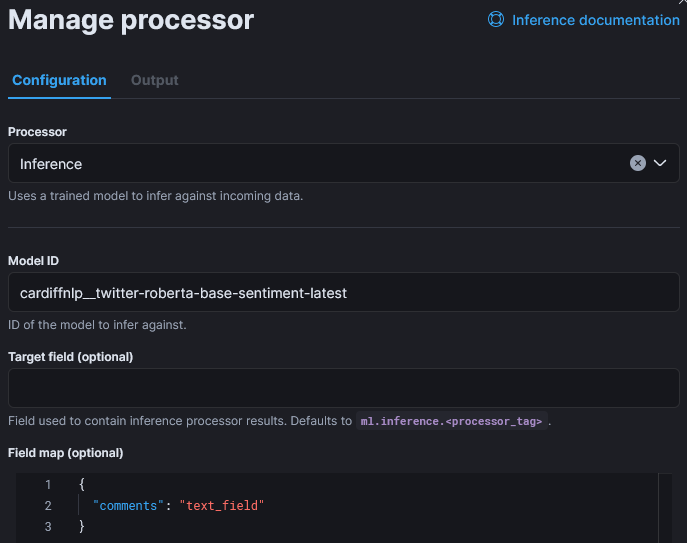

Optionally, but recommended, it is possible to add failure processors which will set a field to keep track of the cause and will put the failed documents in a different index:
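Expressed as a pipeline definition, the processors described above might look roughly like the sketch below. It uses the csv, remove, date, inference and set processors; the column names, the date format `yyyy-MM-dd` and the failure index name `csv-failed` are assumptions, not values taken from the screenshots.

```python
import json


def build_csv_pipeline(model_id, columns, failure_index="csv-failed"):
    """Build an ingest pipeline body: split CSV, set @timestamp, classify sentiment."""
    return {
        "description": "Split CSV review lines, set @timestamp, classify sentiment",
        "processors": [
            {"csv": {"field": "message", "target_fields": columns}},
            {"remove": {"field": "message"}},
            # Assumed date format of the review date column.
            {"date": {"field": "date", "formats": ["yyyy-MM-dd"], "target_field": "@timestamp"}},
            {"remove": {"field": "date"}},
            # Map the document field "comment" to the model input "text_field".
            {"inference": {"model_id": model_id, "field_map": {"comment": "text_field"}}},
        ],
        "on_failure": [
            {"set": {"field": "error.message", "value": "{{ _ingest.on_failure_message }}"}},
            {"set": {"field": "_index", "value": failure_index}},
        ],
    }


if __name__ == "__main__":
    # Hypothetical column list for the reviews file.
    cols = ["listing_id", "id", "date", "reviewer_id", "reviewer_name", "comment"]
    pipeline = build_csv_pipeline("cardiffnlp__twitter-roberta-base-sentiment-latest", cols)
    print(json.dumps(pipeline, indent=2))
```

The printed JSON could be used as the body of a PUT _ingest/pipeline/csv request, csv being the pipeline name referenced in filebeat.yml.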

Ingest

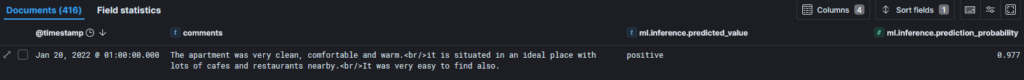

Now, I can simply copy the review.csv into the watched directory and Filebeat will send its lines to Elasticsearch. After a few minutes, I can see the first results:

Or an example considered negative, with the associated prediction probability:

What Next?

Of course, we could try another model to compare results.

If you did not notice, this was also a first step into the Extract-transform-load (ETL) topic.

The article Elasticsearch, Ingest Pipeline and Machine Learning first appeared on dbi Blog.

Learning Azure by having fun with ChatGPT

Are you studying for an Azure certification? You may feel at some point you need a break from the dry and factual technical information. I’m in your shoes right now and I have done some experiments with ChatGPT. I’m not using it to write my text but to learn by having fun! It is very good at telling stories so why not use that for what interests me right now? Azure architecture!

In this blog post I’m going to show you how I use it, it may give you some ideas to make your learning a little more spicy!

Prompt for preparing ChatGPT with Azure

First you’ll need to prepare ChatGPT by explaining what you want. Set the framework and limit its responses otherwise it is too chatty. As I like science fiction (sci-fi), I’m interested in a futuristic story but you could choose any style you like. My first prompt and ChatGPT response is shown below:

Act as an Azure architect. You are in charge of educating me, your student, to become an Azure architect too. You will respond to my questions by using story telling to explain each concepts requested.

I want you to:

– Always tell a simple and futuristic story

– Limit the reply to 100 words

As an Azure architect, imagine Azure as a vast cosmic city. Each service is a towering skyscraper, and we, architects, design the blueprints. Let’s start with Virtual Networks. They’re like the city’s infrastructure, connecting buildings. Subnets divide areas within each building, like floors. Next, VMs are the inhabitants, residing in these skyscrapers, each serving a unique purpose. Azure Storage, a network of secure vaults, safeguards their belongings. With Azure Load Balancer as traffic controllers, the city thrives, ensuring smooth navigation. As architects of this digital metropolis, we sculpt a future where innovation soars amidst the azure skyline.

Not bad! It gives me some key components of an Azure infrastructure and makes some analogies I can easily picture in my head. With this basis, I can now be the hero of this story by asking more questions.

Build up that Azure cosmic city with ChatGPT

You can then ask questions around your area of study (mine is Azure architecture) and stay immersed in that story. For example:

What are the best practice to govern this Azure cosmic city?

In our cosmic city, governance is paramount. Azure Policy acts as the city’s constitution, ensuring compliance with regulations. Role-Based Access Control (RBAC) assigns roles to citizens, granting appropriate permissions. Azure Resource Manager templates are like city blueprints, ensuring consistency in construction. Tags label buildings for easy identification, while Azure Monitor keeps a watchful eye on city activities. With these best practices, our cosmic city thrives, ensuring order, security, and harmony amidst the azure skyline.

It gives you the key elements of Azure governance by continuing its story. You can go on like this back and forth by throwing in any chapter of your course. You can focus on a specific use case as well and see how it integrates with this story:

How can I migrate my SQL Server database on-premises to this city?

Imagine your SQL Server database as a cozy cottage in the countryside. To migrate to our cosmic city, Azure, we’ll use Azure Database Migration Service, like a futuristic moving company. It carefully transports your data to Azure SQL Database, a sleek skyscraper in our city. With minimal downtime, your database seamlessly transitions to its new home, ready to thrive amidst the azure skyline. Just sit back, relax, and watch as your data becomes part of the bustling digital metropolis.

How do I ensure my database is highly resilient in this cosmic city?

In our cosmic city, resilience is key. Azure offers a robust solution: Azure SQL Database with built-in redundancy, akin to a fortress with multiple layers of defense. Automatic backups act as a safety net, ensuring data is never lost. Geo-replication creates duplicates across regions, safeguarding against disasters. With Azure SQL Database’s high availability and disaster recovery features, your database thrives amidst the azure skyline, resilient against any digital storm.

I don’t know if it works for you, but I find it fascinating that I can make ChatGPT talk endlessly and respond to any question I have about Azure in a sci-fi story!

Dive into an Azure component

As usual with ChatGPT, you need to drive the discussion to get the most out of it. Let’s see what it has to say about Azure AD (I use the legacy terminology as ChatGPT v3.5 does not yet know about Microsoft Entra ID):

What and where would be the Azure AD in this city?

In our bustling city, Azure AD is the central hub, akin to City Hall. It’s where identities are registered, like citizens in our digital society. Located at the heart of the city, Azure AD ensures secure access to every digital service and resource, much like City Hall grants access to essential services. From authentication to authorization, Azure AD governs the city’s digital identities, ensuring a seamless and secure experience for all inhabitants amidst the azure skyline.

How would Azure AD conditional access be implemented in this city?

In our city, Azure AD Conditional Access acts as the city’s security checkpoints. Picture futuristic gates at key entry points, only granting access to authorized individuals based on specific conditions. Want to enter the financial district skyscraper? You’ll need more than just a pass; perhaps a biometric scan during business hours. Conditional Access ensures only the right people, with the right permissions, access sensitive areas of our digital city. It’s our shield against cyber threats amidst the azure skyline, ensuring a safe and secure digital environment for all inhabitants.

How would I connect from my Active Directory in the countryside to this Azure AD in the cloud?

In the countryside, your Active Directory (AD) is like a quaint village square, bustling with local identities. To connect to Azure AD in our cosmic cloud city, envision a digital bridge spanning the distance between village and skyline. Azure AD Connect is your trusty guide, seamlessly synchronizing identities from countryside AD to Azure AD. With this bridge in place, villagers can access digital services in the cloud using their familiar countryside credentials. Just like that, the countryside becomes an integral part of our digital metropolis, united under the azure skyline.

You can then, piece by piece and component by component, create a complete city in the countryside (on-premises) and in the cloud and illustrate their interactions. We could completely gamify this! SimCity anyone?

Limitations

Some general concepts are more difficult to put into a visual story, for example the Microsoft Cloud Adoption Framework:

What would be the Microsoft Cloud Adoption Framework for Azure in this city?

In our dynamic metropolis, the Microsoft Cloud Adoption Framework for Azure is our roadmap to success. Like a futuristic GPS, it guides us through the twists and turns of digital transformation. Envisioning helps us set our destination, planning ensures we choose the best route, and readiness prepares us for the journey ahead. Migration helps us seamlessly transition, while innovation propels us forward. With governance as our compass and optimization as our fuel, we navigate the ever-changing landscape, building a future where our digital city thrives amidst the azure skyline. Ready to embark on this transformative journey?

I don’t find this one fantastic, as we recognize the empty blabbering of ChatGPT. We would need to be more specific to get something more interesting.

Wrap up

You can see how, while taking a break from your studies, you can still continue to learn by having fun in an imaginary world. You could totally convert all this into visual notes that will help you when you renew your certifications. That is something I’m starting to explore.

This is just a glimpse of how you could use ChatGPT in your journey to learn Azure or anything else. Brainstorm any concept, service or component you are learning and see how it integrates into a visual story to get a high-level picture. Let me know if you are using ChatGPT that way for learning and what world you are building with it!

The article Learning Azure by having fun with ChatGPT first appeared on dbi Blog.

Monitor Elasticsearch Cluster with Zabbix

Setting up Zabbix monitoring for an Elasticsearch cluster is quite easy as it does not require an agent install. As a matter of fact, the official template uses the Elastic REST API, and the Zabbix server itself triggers these requests.

In this blog post, I will quickly explain how to set up an Elasticsearch cluster, then show how easy the Zabbix setup is and list possible issues you might encounter.

Elastic Cluster Setup

I will not go into too much detail as David has already covered many topics around ELK. Anyway, should you need any help to install, tune or monitor your ELK cluster, feel free to contact us.

My 3 virtual machines are provisioned with YaK on OCI. Then, I install the rpm on all 3 nodes.

After starting the service on the first node, I generate an enrollment token with this command:

/usr/share/elasticsearch/bin/elasticsearch-create-enrollment-token -node

This returns a long string which I will need to pass to nodes 2 and 3 of the cluster (before starting anything):

/usr/share/elasticsearch/bin/elasticsearch-reconfigure-node --enrollment-token <...>

The output will look like this:

This node will be reconfigured to join an existing cluster, using the enrollment token that you provided.

This operation will overwrite the existing configuration. Specifically:

- Security auto configuration will be removed from elasticsearch.yml

- The [certs] config directory will be removed

- Security auto configuration related secure settings will be removed from the elasticsearch.keystore

Do you want to continue with the reconfiguration process [y/N]

After confirming with a y, we are almost ready to start. First, we must update the ES configuration file (i.e. /etc/elasticsearch/elasticsearch.yml).

- Add the IP of the first node (only for the first bootstrap) in:

cluster.initial_master_nodes: ["10.0.0.x"]

- Set the listening IP for inter-node traffic (to do on node 1 as well):

transport.host: 0.0.0.0

- Set the list of master-eligible nodes:

discovery.seed_hosts: ["10.0.0.x:9300"]

Now, we are ready to start node 2 and 3.

Let’s check the health of our cluster:

curl -s https://localhost:9200/_cluster/health -k -u elastic:password | jq
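The JSON returned by this call can also be checked programmatically, for instance in a small script. A minimal sketch, assuming the usual fields of the cluster health response (status, number_of_nodes, unassigned_shards); the sample payload is made up and not output from my cluster.

```python
import json


def summarize_health(payload):
    """Return (is_green, one-line summary) for a _cluster/health response."""
    status = payload["status"]
    nodes = payload.get("number_of_nodes", 0)
    unassigned = payload.get("unassigned_shards", 0)
    name = payload.get("cluster_name", "?")
    summary = f"{name}: status={status}, nodes={nodes}, unassigned_shards={unassigned}"
    return status == "green", summary


if __name__ == "__main__":
    # Illustrative payload only.
    sample = json.loads(
        '{"cluster_name": "demo", "status": "yellow", '
        '"number_of_nodes": 2, "unassigned_shards": 3}'
    )
    print(summarize_health(sample))
```

The status and the number of unassigned shards are the values that come up again later when a node is stopped.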

If you forgot the password of the elastic user, you can reset it with this command:

/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic

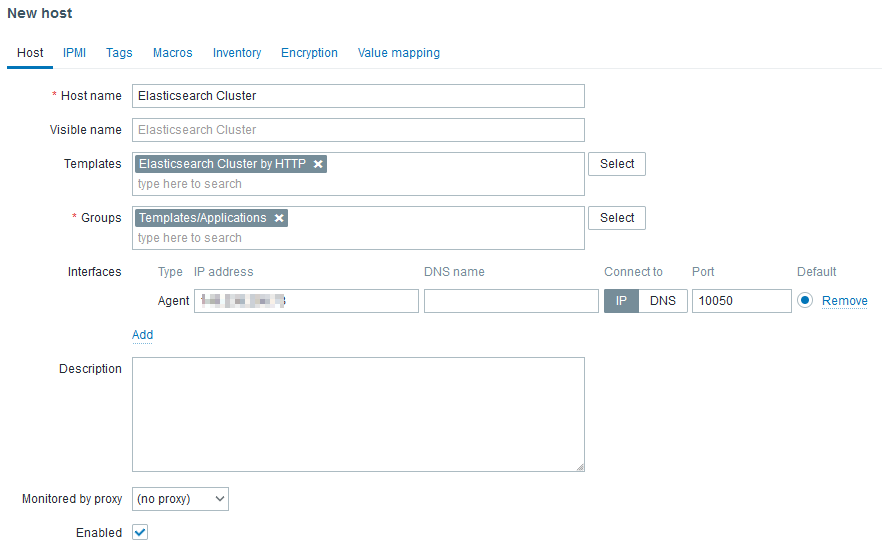

With the latest Elasticsearch releases, security has drastically increased as SSL communication became the standard. Nevertheless, the default macro values of the template did not follow. Thus, we have to customize the following:

- {$ELASTICSEARCH.USERNAME} to elastic

- {$ELASTICSEARCH.PASSWORD} to its password

- {$ELASTICSEARCH.SCHEME} to https

If SELinux is enabled on your Zabbix server, you will need to allow the zabbix_server process to send network requests. The following command achieves this:

setsebool zabbix_can_network 1

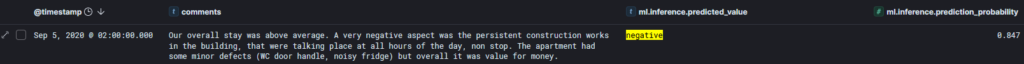

Next, we can create a host in the Zabbix UI like this:

The Agent interface is required but will not be used to reach any agent, as there are no agent-based (passive or active) checks in the linked template. However, the HTTP checks use the HOST.CONN macro in their URLs. Ideally, the IP should be a virtual IP or a load-balanced IP.

Don’t forget to set the MACROS:

After a few minutes, and once node discovery has run, you should see something like this:

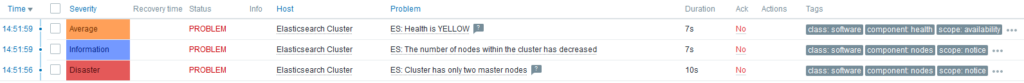

What will happen if one node stops? On Problems tab of Zabbix UI:

After a few seconds, I noticed that ES: Health is YELLOW gets resolved on its own. Why? Because shards are re-balanced across the running servers.

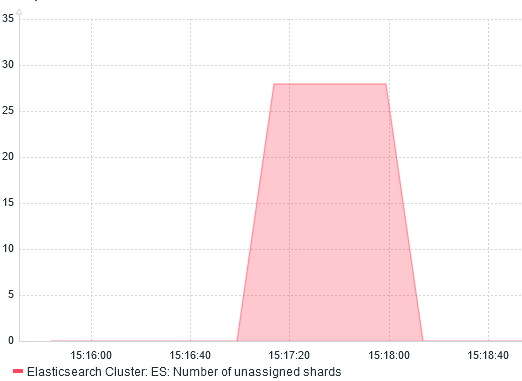

I confirm this by graphing Number of unassigned shards:

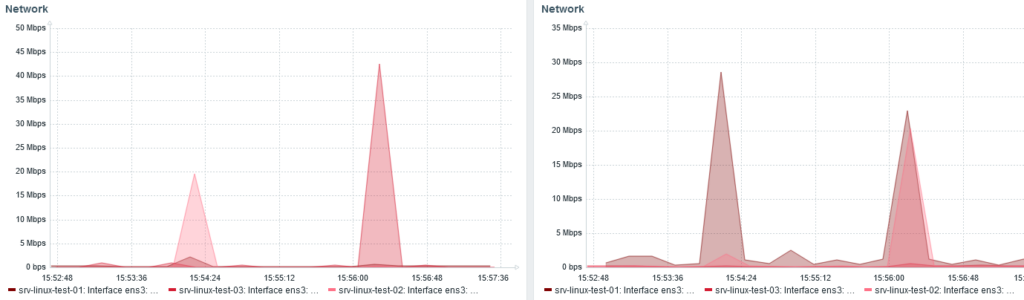

We can also see the re-balancing with the network traffic monitoring:

Received bytes on the left. Sent on the right.

Around 15:24, I stopped node 3 and shards were redistributed between nodes 1 and 2.

When node 3 starts again, at 15:56, we can see nodes 1 and 2 (20 Mbps each) sending shards back to node 3 (40 Mbps received).

Conclusion

Whatever monitoring tool you are using, it always helps to understand what is happening behind the scenes.

The article Monitor Elasticsearch Cluster with Zabbix first appeared on dbi Blog.

Power BI Report Server: unable to publish a PBIX report

I installed a completely new Power BI Report Server. The server had several network interfaces in order to be part of several subdomains. To access the Power BI Report Server web portal from the different subdomains, I defined 3 different HTTPS URLs in the configuration file and a certificate binding. I also used a specific Active Directory service account to start the service. I restarted the Power BI Report Server service and checked that the URL reservations were done correctly, as I knew that in the past this part could be a source of problems.

Everything seemed to be OK. I tested the accessibility of the Power BI Report Server web portal from clients in the different subnets and everything was fine.

The next test was the upload of a Power BI report to the web portal. Of course, I made sure to use a report developed with Power BI Desktop RS.

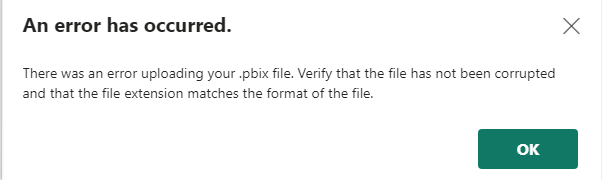

Error raised

An error was raised when uploading a Power BI report in the web portal.

Trying to publish the report from Power BI Desktop RS was failing as well.

Troubleshooting

Report Server log analysis:

I started to analyze the Power BI Report Server logs. For a standard installation they are located in

C:\Program Files\Microsoft Power BI Report Server\PBIRS\LogFiles

In the last RSPowerBI_yyyy_mm_dd_hh_mi_ss.log file written I could find the following error:

Could not start PBIX System.Reflection.TargetInvocationException: Exception has been thrown by the target of an invocation. ---> System.Net.HttpListenerException: Access is denied

As the error showed “Access is denied”, my first reaction was to put the service account I used to start the Power BI Report Server in the local Administrators group.

I restarted the service and tried publishing the Power BI report again. It worked without issue.

Well, I had a solution, but it wasn’t an acceptable one. An application service account should not be a local admin of a server; it would be a security breach and is not permitted by the security governance.

Based on the information contained in the error message, I could tell that it was related to URL reservation, but I had not noticed any issue during the configuration steps.

I then analyzed the list of reserved URLs on the server. Run the following command with elevated permissions to get the list of URL reservations on the server:

Netsh http show urlacl

List of URL reservation found for the user NT SERVICE\PowerBIReportServer:

Reserved URL : http://+:8083/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub1.domain.com:443/ReportServerPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub2.domain.com:443/ReportServerPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://servername.domain.com.ch:443/PowerBI/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://servername.domain.com.ch:443/wopi/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub1.domain.com:443/ReportsPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://sub2.domain.com:443/ReportsPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Reserved URL : https://servername.domain.com.ch:443/ReportsPBIRS/

User: NT SERVICE\PowerBIReportServer

Listen: Yes

Delegate: No

SDDL: D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)

Checking the list I could find:

- the 3 URLs reserved for the web service containing the virtual directory I defined, ReportServerPBIRS

- the 3 URLs reserved for the web portal containing the virtual directory I defined, ReportsPBIRS

But I noticed that only 1 URL was reserved for the virtual directories PowerBI and wopi containing the servername.

The 2 others with the subdomains were missing.
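This kind of comparison is easy to get wrong by eye when the list is long. As a sketch, the output of netsh http show urlacl could be parsed to list, per virtual directory, the hosts that have no reservation; the function names are hypothetical and only the "Reserved URL" lines of the output are used.

```python
import re
from collections import defaultdict
from urllib.parse import urlsplit


def reservations_by_path(netsh_output):
    """Map each HTTPS virtual directory path to the set of hosts reserved for it."""
    by_path = defaultdict(set)
    for match in re.finditer(r"Reserved URL\s*:\s*(\S+)", netsh_output):
        parts = urlsplit(match.group(1))
        if parts.scheme == "https":
            by_path[parts.path].add(parts.hostname)
    return by_path


def missing_reservations(netsh_output):
    """Return {path: [hosts without a reservation]} across all hosts seen."""
    by_path = reservations_by_path(netsh_output)
    all_hosts = set().union(*by_path.values()) if by_path else set()
    return {
        path: sorted(all_hosts - hosts)
        for path, hosts in by_path.items()
        if all_hosts - hosts
    }


if __name__ == "__main__":
    # Shortened sample in the format shown above.
    sample = """
    Reserved URL : https://sub1.domain.com:443/ReportsPBIRS/
    Reserved URL : https://sub2.domain.com:443/ReportsPBIRS/
    Reserved URL : https://servername.domain.com.ch:443/ReportsPBIRS/
    Reserved URL : https://servername.domain.com.ch:443/PowerBI/
    """
    print(missing_reservations(sample))
```

Every path reported here is a candidate for an additional netsh http add urlacl command.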

Solution

I decided to reserve the URLs for the PowerBI and wopi virtual directories on the 2 subdomains by running the following commands with elevated permissions.

Make sure that the SDDL ID used is the one you find in the rsreportserver.config file.

netsh http add urlacl URL=https://sub1.domain.com:443/PowerBI/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

netsh http add urlacl URL=https://sub2.domain.com:443/PowerBI/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

netsh http add urlacl URL=https://sub1.domain.com:443/wopi/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

netsh http add urlacl URL=https://sub2.domain.com:443/wopi/ user="NT SERVICE\SQLServerReportingServices" SDDL="D:(A;;GX;;;S-1-5-80-1730998386-2757299892-37364343-1607169425-3512908663)"

Restart the Power BI Report Server service

You can see that the error has disappeared from the latest RSPowerBI_yyyy_mm_dd_hh_mi_ss.log file.

I tested the publishing of a Power BI report again, and it worked.

I hope that this reading has helped to solve your problem.

The article Power BI Report Server: unable to publish a PBIX report first appeared on dbi Blog.

PostgreSQL 17: pg_buffercache_evict()

In PostgreSQL up to version 16, there is no way to evict the buffer cache except by restarting the instance. In Oracle you have been able to do that for ages with “alter system flush buffer_cache“, but not in PostgreSQL. This will change when PostgreSQL 17 is released later this year. Of course, flushing the buffer cache is nothing you’d usually like to do in production, but it can be very handy for educational or debugging purposes. This is also the reason why this is intended to be a developer feature.

For getting access to the pg_buffercache_evict function you need to install the pg_buffercache extension as the function is designed to work over the pg_buffercache view:

postgres=# select version();

version

-------------------------------------------------------------------

PostgreSQL 17devel on x86_64-linux, compiled by gcc-7.5.0, 64-bit

(1 row)

postgres=# create extension pg_buffercache;

CREATE EXTENSION

postgres=# \dx

List of installed extensions

Name | Version | Schema | Description

----------------+---------+------------+---------------------------------

pg_buffercache | 1.5 | public | examine the shared buffer cache

plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language

(2 rows)

postgres=# \d pg_buffercache

View "public.pg_buffercache"

Column | Type | Collation | Nullable | Default

------------------+----------+-----------+----------+---------

bufferid | integer | | |

relfilenode | oid | | |

reltablespace | oid | | |

reldatabase | oid | | |

relforknumber | smallint | | |

relblocknumber | bigint | | |

isdirty | boolean | | |

usagecount | smallint | | |

pinning_backends | integer | | |

Once the extension is in place, the function is there as well:

postgres=# \dfS *evict*

List of functions

Schema | Name | Result data type | Argument data types | Type

--------+----------------------+------------------+---------------------+------

public | pg_buffercache_evict | boolean | integer | func

(1 row)

To load something into the buffer cache we’ll make use of the pg_prewarm extension and completely load the table we’ll create afterwards:

postgres=# create extension pg_prewarm;

CREATE EXTENSION

postgres=# create table t ( a int, b text );

CREATE TABLE

postgres=# insert into t select i, i::text from generate_series(1,10000) i;

INSERT 0 10000

postgres=# select pg_prewarm ( 't', 'buffer', 'main', null, null );

pg_prewarm

------------

54

(1 row)

postgres=# select pg_relation_filepath('t');

pg_relation_filepath

----------------------

base/5/16401

(1 row)

postgres=# select count(*) from pg_buffercache where relfilenode = 16401;

count

-------

58

(1 row)

If you wonder why there are 58 blocks cached in the buffer cache although we only loaded 54, this is because of the free space map (fork number 1) and the visibility map (fork number 2):

postgres=# select relforknumber from pg_buffercache where relfilenode = 16401 and relforknumber != 0;

relforknumber

---------------

1

1

1

2

(4 rows)
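The arithmetic behind the 58 buffers can be spelled out with a small tally. This is only an illustration: the fork numbers follow PostgreSQL's numbering (0 main, 1 free space map, 2 visibility map, 3 init), and the helper name is made up.

```python
from collections import Counter

# PostgreSQL relation fork numbers.
FORK_NAMES = {0: "main", 1: "fsm", 2: "vm", 3: "init"}


def tally_forks(main_blocks, other_forknumbers):
    """Count cached buffers per fork and return (per-fork counts, total)."""
    counts = Counter({"main": main_blocks})
    for number in other_forknumbers:
        counts[FORK_NAMES[number]] += 1
    return dict(counts), sum(counts.values())


if __name__ == "__main__":
    # 54 blocks prewarmed from the main fork, plus the four rows shown above.
    print(tally_forks(54, [1, 1, 1, 2]))
```

With 54 main fork blocks, three free space map blocks and one visibility map block, the total is 58.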

Using the new pg_buffercache_evict() function we are now able to completely evict the buffers of that table from the cache, which results in exactly 58 blocks being evicted:

postgres=# select pg_buffercache_evict(bufferid) from pg_buffercache where relfilenode = 16401;

pg_buffercache_evict

----------------------

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

t

(58 rows)

Cross-checking this confirms that all the blocks are gone:

postgres=# select count(*) from pg_buffercache where relfilenode = 16401;

count

-------

0

(1 row)

Nice, thanks to all involved.

The article PostgreSQL 17: pg_buffercache_evict() first appeared on dbi Blog.

Apache httpd Tuning and Monitoring with Zabbix

There is no tuning possible without a proper monitoring in place to measure the impact of any changes. Thus, before trying to tune an Apache httpd server, I will explain how to monitor it with Zabbix.

Setup Zabbix Monitoring

The Apache httpd template provided by Zabbix uses mod_status, which provides metrics about load, processes and connections.

Before enabling this module, we must ensure it is present. The command httpd -M 2>/dev/null | grep status_module will tell you so. Next, we can extend the configuration by creating a file in /etc/httpd/conf.d:

<Location "/server-status">

SetHandler server-status

</Location>

After a configuration reload, we should be able to access the URL http://<IP>/server-status?auto.

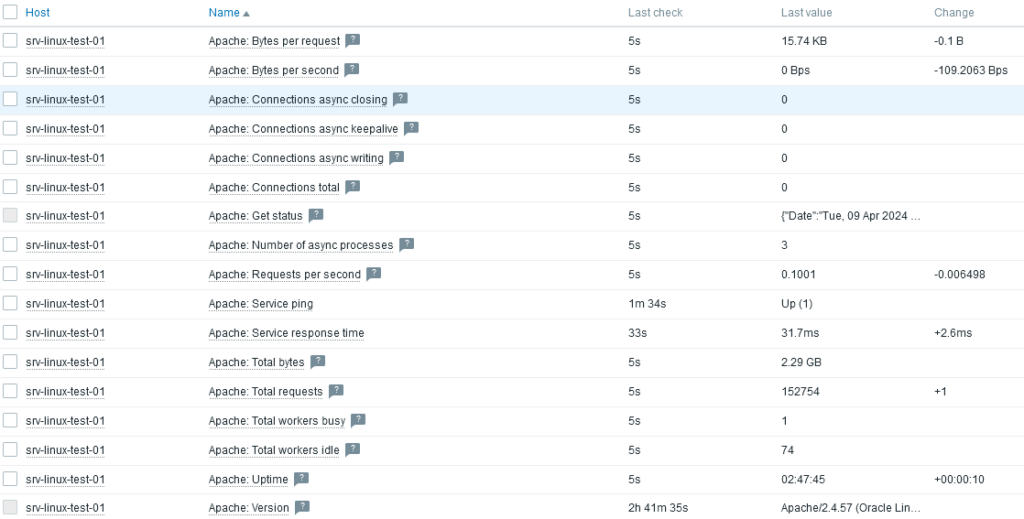
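The ?auto variant of the status page returns plain "Key: Value" lines, which makes it easy to consume from scripts as well as from Zabbix. The following is a minimal sketch of such a parser, not what the Zabbix template does internally; the sample text and its numbers are made up for illustration, and only the key names are taken from mod_status:

```python
def parse_server_status(text):
    """Turn 'Key: Value' lines into a dict, converting numbers where possible."""
    metrics = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if not sep:
            continue  # skip blank or malformed lines
        value = value.strip()
        try:
            metrics[key] = float(value) if "." in value else int(value)
        except ValueError:
            metrics[key] = value  # non-numeric values such as the Scoreboard
    return metrics


# Illustrative sample only: these values are invented, not measured.
SAMPLE = """\
Total Accesses: 1200
Total kBytes: 75000
BusyWorkers: 3
IdleWorkers: 47
Scoreboard: W_K__....
"""

status = parse_server_status(SAMPLE)
workers = status["BusyWorkers"] + status["IdleWorkers"]
busy_ratio = status["BusyWorkers"] / workers
print(status["Total Accesses"], f"{busy_ratio:.0%}")
```

In a real setup the text would come from an HTTP request to the URL above instead of the hard-coded sample.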

Finally, we can link the template to the host and see that data are collected:

Tuning

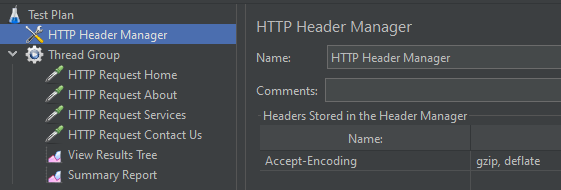

I deployed a simple static web site to the Apache httpd server. To load test that web site, there is nothing better than JMeter. The load test scenario simply requests the Home, About, Services and Contact Us pages and retrieves all embedded resources for 2 minutes with 100 threads (i.e. users).

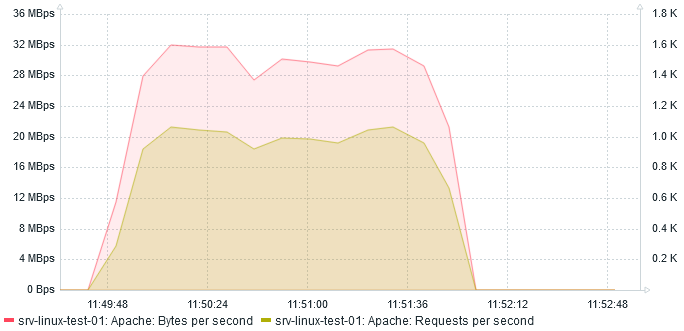

Here is the performance in requests per second (right scale) and bytes per second (left scale):

At most, the server serves 560 req/s at 35 MBps.

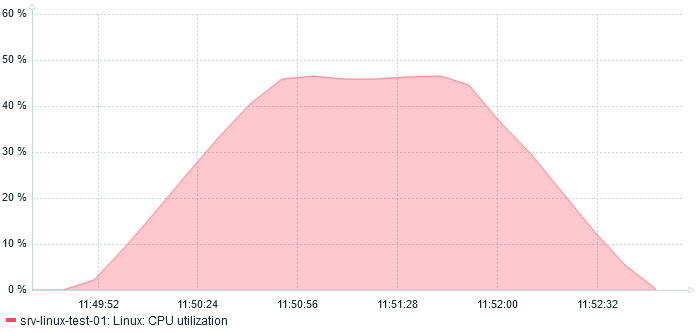

And regarding CPU usage, it almost reaches 10%:

Compression

Without any additional headers, Apache httpd will consider that the client (here JMeter) does not support gzip. Fortunately, it is possible to set HTTP headers in JMeter. I add the header at the top of the test plan so that it applies to all HTTP requests below:

Note that I enabled mod_deflate on Apache side.

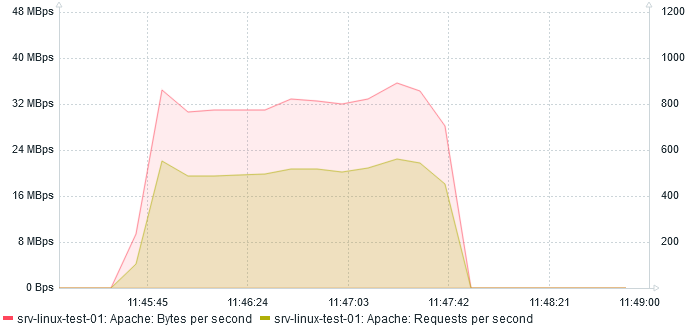

Let’s run another load test and compare the results!

After two minutes, here is what I see:

The throughput dropped to 32 MBps, which is expected as we are compressing. The request rate increased by almost 80%, from 560 to 1000 req/s!
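To put the two runs side by side, here is a small sketch that works only from the peak figures quoted in this post (560 req/s at 35 MBps, then 1000 req/s at 32 MBps). It assumes 1 MB = 1000 kB and that both peaks describe the same moment of each run, which the graphs may not guarantee:

```python
def pct_change(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100


req_before, req_after = 560, 1000  # peak requests per second
mb_before, mb_after = 35, 32       # peak megabytes per second

req_delta = pct_change(req_before, req_after)
mb_delta = pct_change(mb_before, mb_after)

# Average payload per request, in kB (assuming 1 MB = 1000 kB).
kb_per_req_before = mb_before * 1000 / req_before
kb_per_req_after = mb_after * 1000 / req_after

print(f"requests/s: {req_delta:+.1f}%")
print(f"throughput: {mb_delta:+.1f}%")
print(f"kB per request: {kb_per_req_before:.1f} -> {kb_per_req_after:.1f}")
```

Under these assumptions the average response shrinks to roughly half its size, which is why the server can answer many more requests while sending slightly fewer bytes.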

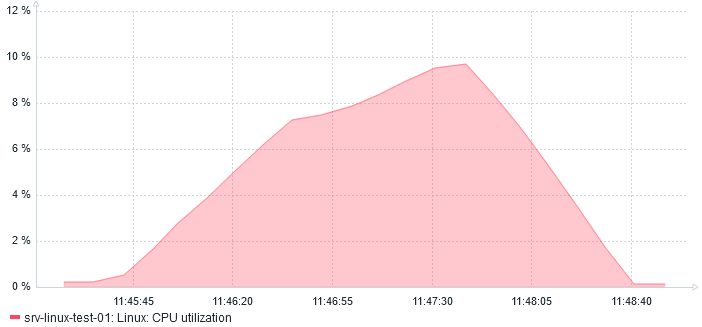

On the CPU side, we also see a huge increase:

45% CPU usage

This is also more or less expected, as compression requires computing.

And Now

The deployed static web site does not have any forms which would require client-side compression. That will be a subject for another blog. I could also compare with Nginx.

The article Apache httpd Tuning and Monitoring with Zabbix first appeared on dbi Blog.

ODA X10-L storage configuration is different from what you may expect

The Oracle Database Appliance X10 lineup has been available since September 2023. Compared to the X9-2 lineup, the biggest changes are the AMD Epyc processors replacing Intel Xeons, and a new license model regarding Standard Edition 2, clarified by Oracle several weeks ago. Apart from these new things, the models are rather similar to the previous ones, with the Small model for basic needs, an HA model with RAC and high-capacity storage for big critical databases, and a much more popular Large model for most needs.

2 kinds of disks inside the ODA X10-L

The ODA I’ve worked on is an X10-L with 2x disk expansions, meaning that I have the 2x 6.8TB disks from the base configuration, plus 4x 6.8TB additional disks. The first 4 disks are classic disks visible on the front panel of the ODA. As there are only 4 bays in the front, the other disks are internal, called AIC for Add-In Card (PCIe). You can have up to 3 disk expansions, meaning 4x disks in the front and 4x AIC disks inside the server. You should know that only the front disks are hot swappable. The other disks being PCIe cards, you will need to shut down the server and open its cover to remove, add or replace a disk.

6.8TB is the RAW capacity; consider that real capacity is something like 6.2TB, but usable capacity will be lower as you will need to use ASM redundancy to protect your blocks. In the ODA documentation, you will find the usable capacity for each disk configuration.

2 AIC disks inside an ODA X10-L. The first 4 disks are in the front.

First contact with X10-L – using odacli

odacli describe-system is very useful for an overview of the ODA you’re connected to:

odacli describe-system

Appliance Information

----------------------------------------------------------------

ID: 3fcd1093-ea74-4f41-baa1-f325b469a3e1

Platform: X10-2L

Data Disk Count: 10

CPU Core Count: 4

Created: January 10, 2024 2:26:43 PM CET

System Information

----------------------------------------------------------------

Name: dc1oda002

Domain Name: ad.dbiblogs.ch

Time Zone: Europe/Zurich

DB Edition: EE

DNS Servers: 10.100.50.8 10.100.50.9

NTP Servers: 10.100.50.8 10.100.50.9

Disk Group Information

----------------------------------------------------------------

DG Name Redundancy Percentage

------------------------- ------------------------- ------------

DATA NORMAL 85

RECO NORMAL 15

Data Disk Count is not what I expected. This is normally the number of DATA disks; it should be 6 on this ODA, not 10.

Let’s do a show disk with odaadmcli:

odaadmcli show disk

NAME PATH TYPE STATE STATE_DETAILS

pd_00 /dev/nvme0n1 NVD ONLINE Good

pd_01 /dev/nvme1n1 NVD ONLINE Good

pd_02 /dev/nvme3n1 NVD ONLINE Good

pd_03 /dev/nvme2n1 NVD ONLINE Good

pd_04_c1 /dev/nvme8n1 NVD ONLINE Good

pd_04_c2 /dev/nvme9n1 NVD ONLINE Good

pd_05_c1 /dev/nvme6n1 NVD ONLINE Good

pd_05_c2 /dev/nvme7n1 NVD ONLINE Good

OK, this command only displays the DATA disks, so the system disks are not in this list, but there are still 8 disks and not 6.

Let’s have a look on the system side.

First contact with X10-L – using system commands

What is detected by the OS?

lsblk | grep disk

nvme9n1 259:0 0 3.1T 0 disk

nvme6n1 259:6 0 3.1T 0 disk

nvme8n1 259:12 0 3.1T 0 disk

nvme7n1 259:18 0 3.1T 0 disk

nvme4n1 259:24 0 447.1G 0 disk

nvme5n1 259:25 0 447.1G 0 disk

nvme3n1 259:26 0 6.2T 0 disk

nvme0n1 259:27 0 6.2T 0 disk

nvme1n1 259:28 0 6.2T 0 disk

nvme2n1 259:29 0 6.2T 0 disk

asm/acfsclone-242 250:123905 0 150G 0 disk /opt/oracle/oak/pkgrepos/orapkgs/clones

asm/commonstore-242 250:123906 0 5G 0 disk /opt/oracle/dcs/commonstore

asm/odabase_n0-242 250:123907 0 40G 0 disk /u01/app/odaorabase0

asm/orahome_sh-242 250:123908 0 80G 0 disk /u01/app/odaorahome

This is rather strange. I can see 10 disks: the 2x 450GB disks are for the system (and normally not considered as DATA disks by odacli), and I can also find 4x 6.2TB disks. But instead of having 2x additional 6.2TB disks, I have 4x 3.1TB disks. The overall capacity is OK, 37.2TB, but it’s different compared to previous ODA generations.
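The disk count and the overall capacity can be re-derived from the lsblk output with a short sketch. The text below is a copy of the NVMe lines shown above (the ACFS volumes are left out), and the script only groups devices by the size column and sums the ones reported in T:

```python
from collections import Counter

# NVMe lines copied from the lsblk output in this post.
LSBLK = """\
nvme9n1 259:0 0 3.1T 0 disk
nvme6n1 259:6 0 3.1T 0 disk
nvme8n1 259:12 0 3.1T 0 disk
nvme7n1 259:18 0 3.1T 0 disk
nvme4n1 259:24 0 447.1G 0 disk
nvme5n1 259:25 0 447.1G 0 disk
nvme3n1 259:26 0 6.2T 0 disk
nvme0n1 259:27 0 6.2T 0 disk
nvme1n1 259:28 0 6.2T 0 disk
nvme2n1 259:29 0 6.2T 0 disk
"""

# Fourth column of lsblk is the size.
sizes = [line.split()[3] for line in LSBLK.splitlines()]
by_size = Counter(sizes)

# Only the devices reported in T are DATA disks on this ODA.
data_capacity = sum(float(size[:-1]) for size in sizes if size.endswith("T"))

for size, count in by_size.items():
    print(f"{count} x {size}")
print(f"DATA capacity: {data_capacity:.1f}T")
```

This gives 4 devices of 3.1T, 4 of 6.2T and the 2 system disks, so 10 devices in total and 37.2T for DATA.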

Let’s confirm this with fdisk:

fdisk -l /dev/nvme0n1

Disk /dev/nvme0n1: 6.2 TiB, 6801330364416 bytes, 13283848368 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: E20D9013-1982-4F66-B7A2-5FE0B1BC8F74

Device Start End Sectors Size Type

/dev/nvme0n1p1 4096 1328386047 1328381952 633.4G Linux filesystem

/dev/nvme0n1p2 1328386048 2656767999 1328381952 633.4G Linux filesystem

/dev/nvme0n1p3 2656768000 3985149951 1328381952 633.4G Linux filesystem

/dev/nvme0n1p4 3985149952 5313531903 1328381952 633.4G Linux filesystem

/dev/nvme0n1p5 5313531904 6641913855 1328381952 633.4G Linux filesystem

/dev/nvme0n1p6 6641913856 7970295807 1328381952 633.4G Linux filesystem

/dev/nvme0n1p7 7970295808 9298677759 1328381952 633.4G Linux filesystem

/dev/nvme0n1p8 9298677760 10627059711 1328381952 633.4G Linux filesystem

/dev/nvme0n1p9 10627059712 11955441663 1328381952 633.4G Linux filesystem

/dev/nvme0n1p10 11955441664 13283823615 1328381952 633.4G Linux filesystem

fdisk -l /dev/nvme8n1

Disk /dev/nvme8n1: 3.1 TiB, 3400670601216 bytes, 6641934768 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: A3086CB0-31EE-4F78-A6A6-47D53149FDAE

Device Start End Sectors Size Type

/dev/nvme8n1p1 4096 1328386047 1328381952 633.4G Linux filesystem

/dev/nvme8n1p2 1328386048 2656767999 1328381952 633.4G Linux filesystem

/dev/nvme8n1p3 2656768000 3985149951 1328381952 633.4G Linux filesystem

/dev/nvme8n1p4 3985149952 5313531903 1328381952 633.4G Linux filesystem

/dev/nvme8n1p5 5313531904 6641913855 1328381952 633.4G Linux filesystem

OK, the 6.2TB disks are split in 10 partitions, and the 3.1TB disks are split in 5 partitions. It makes sense because ASM needs partitions of the same size inside a diskgroup.

First contact with X10-L – using ASM

Now let’s have a look within ASM, the most important thing being that ASM is able to manage the storage correctly:
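The sizes reported by fdisk can be checked with a bit of arithmetic. The sketch below uses only the byte and sector counts printed above. It also suggests, as an interpretation rather than something stated by Oracle, that the 6.8TB versus 6.2TB difference is mostly the decimal TB versus binary TiB unit:

```python
SECTOR = 512   # bytes per sector, as reported by fdisk
TB = 10**12    # decimal terabyte
TIB = 2**40    # binary tebibyte (what fdisk and lsblk print)
GIB = 2**30

# Values copied from the fdisk output in this post.
disk_bytes = {"nvme0n1": 6801330364416, "nvme8n1": 3400670601216}
disk_sectors = {"nvme0n1": 13283848368, "nvme8n1": 6641934768}
part_count = {"nvme0n1": 10, "nvme8n1": 5}
part_sectors = 1328381952  # identical for every partition on both disks

part_gib = part_sectors * SECTOR / GIB
print(f"partition size: {part_gib:.1f} GiB")

unused = {}
for name, size in disk_bytes.items():
    # Sectors not covered by any partition (start offset plus tail).
    unused[name] = disk_sectors[name] - part_count[name] * part_sectors
    print(f"{name}: {size / TB:.1f} TB = {size / TIB:.1f} TiB, "
          f"{part_count[name]} partitions, {unused[name]} sectors unused")
```

Both disk types end up with partitions of exactly the same size, and only a few thousand sectors are left outside the partitions.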

su - grid

sqlplus / as sysasm